🤔 Before we dive in: If you could be known for writing just one one-hit wonder, which one would it be?

Steven Greenberg wrote, recorded, and produced a song that went to #1 on the Billboard Hot 100 in 1980 and stayed #1 for a month. You know it. Everyone around the world knows it. Then after the hoopla faded, Greenberg went back to playing in a wedding band.

The story of “Funkytown” from the Third Story podcast (Apple, Spotify) is a fabulous example of creative determination, persistent curiosity, intense focus on craft, and gratitude. It’s a worthy addition to everything else we know about music made in Minneapolis.

There are many ways to lead creative teams.

I’m inspired by Rishad Tobaccowala’s “4 Keys to Leading Today,” in which he notes,

“Due to the rapid change in demography and technology the half-life of whatever one has learned rapidly decays and the fuel tank of competence needs to be continuously filled.”

Rishad suggests curiosity and skill building are some of the means by which modern leaders must lead in this era. That sentiment also holds true in my recent podcast interview with Hill Holliday’s Chief Creative, Dave Weist. If we’ve learned one thing since AI has infected and influenced creativity—and the operations and leadership of creative enterprises—it’s the requirement to keep learning.

Creative leaders can’t rest on their laurels.

But how much should you invest to learn? In other words…

Is an AI worth $200/month?

You’ve heard of ChatGPT Pro, the $200-per-month subscription tier that includes “deep research.” As Nate Jones says, “it’s like playing with the future.” Worth noting: the $200/mo “Pro” option is really three things:

The o1 model itself (launched December 5, 2024)

Access to Operator, an “agent” that uses its own browser to perform tasks for you (launched January 23, 2025)

“Deep Research” capability (launched February 2, 2025)

Now, one way to answer the prevailing question is to consider how much you currently spend, per month, on computing—and what you gain as a result. What’s the monthly “cost/benefit” of your laptop’s value, software subscriptions, access to platforms, etc.? In other words, what do you pay per month to gain access to tools which help you deliver value to others? You obviously need a decent laptop and internet to leverage most of these features, but the point is—can a subscription like “Pro” help you deliver more than $2,400/year value to your clients and business? (And let’s assume you know how to write off much if not all of the investment on your taxes.)
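To make that cost/benefit question concrete, here is a minimal back-of-the-envelope sketch in Python. The $200 monthly fee comes from the pricing above; the $100 hourly rate is purely a hypothetical placeholder, so swap in your own number.

```python
def breakeven_hours_per_month(monthly_fee: float, hourly_rate: float) -> float:
    """Hours of billable-equivalent time the tool must save each month to pay for itself."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_fee / hourly_rate


MONTHLY_FEE = 200.0          # ChatGPT Pro price cited in this post
ASSUMED_HOURLY_RATE = 100.0  # hypothetical rate for illustration only

annual_cost = MONTHLY_FEE * 12
hours = breakeven_hours_per_month(MONTHLY_FEE, ASSUMED_HOURLY_RATE)

print(f"Annual cost: ${annual_cost:,.0f}")
print(f"Break-even: {hours:.1f} hours saved per month at ${ASSUMED_HOURLY_RATE:,.0f}/hour")
```

Under that assumed rate, the subscription pays for itself if it saves roughly two hours of work a month. The sketch ignores taxes, the learning curve, and the time you spend editing and fact-checking the output.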

ChatGPT’s deep research has been promoted as a means to propel medical investigation, address complex coding, and help solve arcane mathematics problems. But what about the majority of my readers, who focus on subjective topics like strategic insights and creativity? I plunked down the $200 to find out (in other words, I just toggled a switch in the ChatGPT app on my phone). So here goes.

I’ve spent the past six days using deep research to help me develop strategies and insights for various marketing projects, from new business research to audience analysis to distilling creative briefs. I have not been using the deep research function to develop creative ideas. That’s not what it’s for. I wanted to see how the $200/mo model fared while delivering consulting work. So I dug up prompts I’ve used for previous assignments to contrast how ChatGPT’s deep research delivers versus ChatGPT 4o, Claude Sonnet 3.5, and Gemini’s latest model.

I concur with Casey Newton’s results.

“While great consultants can undoubtedly still produce much better reports than this agent, I suspect some of them might be surprised how good deep research is even in this early stage.”

Or as Wharton’s Ethan Mollick puts it via his analysis:

“For the first time, an AI isn’t just summarizing research, it’s actively engaging with it at a level that actually approaches human scholarly work.”

Can deep research prove useful to those of us charged with creative and strategic assignments? Here’s an example of what I did to find out. Let’s assume we’re in a strategy role and there’s a pitch opportunity in the dairy snacks industry, and you’ve never worked in this space before. So you might write a prompt like this:

✏️ You’re an expert in marketing. Help me develop competitive intelligence in the dairy foods category related to refrigerated snacks. On the industry side, I want to understand the major manufacturers, their brands and latest products, and which retailers (by key markets) tend to carry and promote the newest dairy snacks. For consumers, help me understand the demographics, psychographics and media habits of US adults most likely to purchase refrigerated snacks. I’d like to identify potential white space for new refrigerated dairy snacks.

First I fed that prompt to my typical AI interns to set a baseline. Each of these results took a few seconds.

ChatGPT 4o - 533 words

Claude (Sonnet 3.5) - 280 words

Google Gemini (2.0 Flash) - 680 words

I’ve worked in CPG and dairy. Those results were generally fine, but not particularly inspiring. You’d have to refine prompts and continue to elicit anything truly useful.

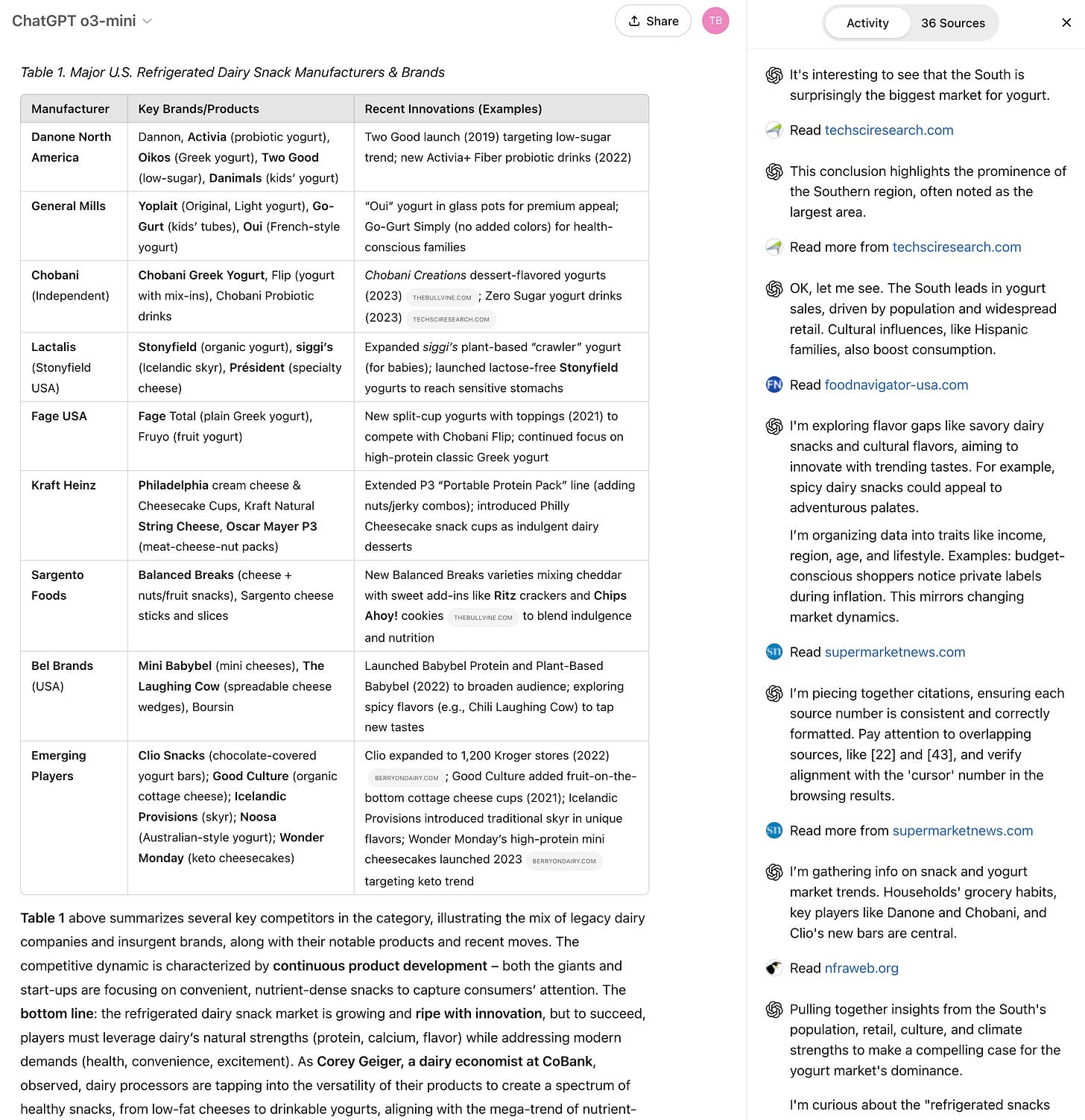

Now here’s the response from ChatGPT’s deep research using their “o3-mini” model. The output took 10 minutes to render, and delivered 11,267 words.

Note how this was the only tool, of the four, which asked clarifying questions before getting to work. Notice the “conversation” the AI is having with itself on the right side as it refines its research. I’ve definitely noticed errors here across my range of tests, but also intriguing segues as the research unfolded.

But at the end of the day…

Ten minutes.

11,267 words.

If you’re under the gun, and/or new to a category—this kind of result might seem a bargain at $200. How much more effort would it take you to develop the same using search and the “free” AIs?

I’ve also been watching others work with ChatGPT’s deep research:

Mark Kashef gives a thorough analysis and contrasts the model against Gemini and Perplexity

Brittany Long has it research quantum computing

Igor at the AI Advantage documents 12 use cases; the last one on federal procurement practices demonstrated intriguing value

One thing is clear using deep research: Prompt writing still kind of matters. It appears OpenAI’s President Greg Brockman agrees. Think of it this way: If you were instigating work with an intern, you’d likely draft relevant context, and maybe even think through the problem you want them to solve so your assignment is less of a guessing game. The same holds true with this type of “reasoning” AI, at least for now.

Okay, fine—is ChatGPT Pro worth $200 a month?

I wish I could give you a single, definitive, unified answer.

In all candor, I’m not going to pay for month 2. Mostly because I don’t see a workload coming which would warrant the expense.

But if I were working solo, repeatedly responsible for lengthy analysis and strategic outcomes, and struggling to balance multiple streams of work, then $200/mo would seem a bargain given the current results. A single strategist who knows what they’re doing could make a lot happen from the investment.

Let’s be clear: It’s not a panacea. It’s not perfect.

You still need to play the role of editor, curator, proofer and fact checker.

And there are critics who are dead set against deep research; who think its results are garbage. Ed Zitron isn’t having any of the generative AI hype. As it relates to ChatGPT’s $200/mo option, he writes,

“Deep Research has the same problem as every other generative AI product. These models don't know anything, and thus everything they do — even ‘reading’ and ‘browsing’ the web — is limited by their training data and probabilistic models that can say ‘this is an article about a subject’ and posit their relevance, but not truly understand their contents. Deep Research repeatedly citing SEO-bait as a primary source proves that these models, even when grinding their gears as hard as humanely possible, are exceedingly mediocre, deeply untrustworthy, and ultimately useless.”

Maybe so.

But my experiences paint an intriguing and optimistic picture of the future. In many ways, our task as creative leaders is to peek around the corners, the better to guide those working with us. If my results are any indication, tools like ChatGPT’s deep research will provide increasingly remarkable value.

AI+Creativity Update

✏️ For my money, Ryan Broderick comprehends and explains Internet culture better than anyone. From Monday’s post, “The Age Of Influentialism,” he writes,

“We’ve now found ourselves amid another shift in mass communication. One where algorithms successfully replaced ink and airwaves. And, just as 20th-century mass media created the concept of the consumer a hundred years ago, so too has this current shift redefined how we interact with the world. And accepting that this shift is permanent, in the sense that we can’t simply reverse it, is, I’m convinced, the only way forward.”

🏈 It’s been over a week. Does criticism of Kendrick Lamar’s 2025 halftime performance resemble the frustration aimed at Bob Dylan in 1965 when he “went electric” at the Newport Folk Fest? As one commentator wrote, “Kendrick Lamar’s halftime show was a nuanced critique of America that slid right under a lot of noses. He was saying, America is a game and we’re all trapped inside of it.” My take: Seems silly to get angry at an artist for doing what artists are supposed to do.

👦🏼👴🏼 Future You from MIT uses AI to help you have a conversation with you in the future (explainer video).