159: When something significant happens every day

My HeyGen buddy Wayne will take your Zoom meeting; lots of Adobe AI news; Claude is an agent; NotebookLM evolves; Watkins, Mollick, Amodei write words

We come by the exhaustion honestly.

Not a day seems to go by without something audacious, worrying, surprising, or inventive arriving to disrupt the status quo. This is what sets AI apart from previous cultural shifts, first the Internet and then mobile/social: the speed of revelation and the sheer diversity of AI’s impacts appear endless.

Fortunately, we won’t have to attend video meetings much longer.

HeyGen has joined the meeting

HeyGen, the generative AI video platform, is rolling out interactive video avatars who can attend Zoom meetings on your behalf. Here’s Wayne and me getting acquainted before I unleash him on my clients.

Smooth, right? 😆

But we have to remind ourselves of an AI axiom: This is the worst version of the technology we’ll ever experience. Soon enough, Wayne might actually represent you or me with enough nuance, respect, and imagination to pass muster. And the avatar needn’t be Wayne: it could look like me, you, or however we want it to. Its training data will be whatever we provide. (And if you’re thinking this could conjure impersonations, misinformation, and scams, trust your instincts.)

Adobe AI updates

Meanwhile, Adobe Express has been offering an AI feature that syncs your voice with one of many animated characters. You select the size (Insta, LinkedIn, FB, etc.), the character, and the background, then upload or record your VO… and voilà.

Speaking of Adobe, its annual creativity conference was last week. AI was infused in and across everything, from social media production to video workflows to photo editing. But it was the future-oriented R&D sneak previews that stole the show:

Clean Machine: Uses AI to detect and remove flashes from video, a fix that is otherwise ridiculously cumbersome to make by hand. In other words, mistakes or challenges during video capture will matter less and less.

Remix A Lot: Listen to the audience react as AI interprets a pencil sketch and converts it, almost instantly, into an editable layout, matching the hand-drawn font pretty well. Then an AI “layout variations” button turns that single layout into multiple other aspect ratios and formats. TikTok loved the part of the demo where the designer says she lost her source file, then uses a .png as a reference to completely restyle an existing Adobe Illustrator design, mimicking the reference’s style, colors, and layout. This is the bread and butter of graphic design, the entire rationale for platforms like Canva. Which raises yet another AI axiom: What new tasks can we take on, now that AI can eliminate the old ones?

Turntable: What if AI could rotate, pan, and skew a 2D illustration in three dimensions? Imagine you’ve got one character facing straight ahead and another facing to the side. Instead of re-illustrating one, you use an AI “turntable” to pivot one of your illustrations so the perspectives match. That might not seem like much, but as this TikToker explains, this demo could be huge for the entire world of illustration.

Who knows when, or how, these “sneaks” will make it into production. But it’s clear Adobe sees where the money leads: AI tooling that simplifies, streamlines, and removes former complexity from myriad creative efforts.

The new Claude is different yet the same?

OMG, could someone please talk with Anthropic about giving an update the exact same name as the model it updates? Apparently Claude 3.5 Sonnet is now called Claude 3.5 Sonnet (New).

But under the hood there’s a significant difference. Much of it is experimental, so you and I can’t access it yet, but that’s why they make demo videos.

Long story short: An AI such as Claude is essentially a (multi-task) agent and will increasingly be able to:

Take a screen grab of your desktop

Read the visible text (e.g., file names, app names) and infer what content and capabilities are on your computer. You can already see a future where screen grabbing seems silly and the AI simply reads whatever’s on your screen directly.

Then control your cursor to navigate and take actions

In the demo, Claude fills out a vendor request form while narrating its autonomous activity in a sidebar (“Now I’m doing X”), so you can follow along with whatever you’ve asked Claude to do.
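For the technically curious, here’s a toy sketch of that screenshot, read, act loop. To be clear, this is not Anthropic’s API or how Claude actually works under the hood: `FakeDesktop`, `choose_action`, and `run_agent` are hypothetical names, the “screen” is just a dictionary of form fields, and a simple rule stands in for the model’s decision-making.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str         # "click", "type", or "done"
    target: str = ""  # label of the field to click (hypothetical)
    text: str = ""    # text to type into the focused field


@dataclass
class FakeDesktop:
    """Hypothetical stand-in for a real screen: a vendor form with empty fields."""
    fields: dict[str, str] = field(
        default_factory=lambda: {"Vendor name": "", "Email": ""}
    )
    focused: str = ""

    def screenshot(self) -> dict[str, str]:
        # Step 1: "take a screen grab" (here, just a copy of the visible fields)
        return dict(self.fields)

    def perform(self, action: Action) -> None:
        # Step 3: "control the cursor": a click focuses a field, typing fills it
        if action.kind == "click":
            self.focused = action.target
        elif action.kind == "type" and self.focused:
            self.fields[self.focused] = action.text


def choose_action(screen: dict[str, str], focused: str, task: dict[str, str]) -> Action:
    """Step 2: read the screen and decide what to do next.

    A real agent would send the screenshot to a model; this toy uses a fixed rule.
    """
    if focused in task and screen.get(focused) == "":
        return Action("type", text=task[focused])
    for label, value in screen.items():
        if label in task and value == "":
            return Action("click", target=label)
    return Action("done")


def run_agent(desktop: FakeDesktop, task: dict[str, str], max_steps: int = 10) -> list[str]:
    """Observe, decide, act, repeat, narrating each step like the demo's sidebar."""
    log = []
    for _ in range(max_steps):
        action = choose_action(desktop.screenshot(), desktop.focused, task)
        if action.kind == "done":
            log.append("Now I'm done.")
            break
        log.append(f"Now I'm doing: {action.kind} {action.target or action.text}")
        desktop.perform(action)
    return log


if __name__ == "__main__":
    desk = FakeDesktop()
    for line in run_agent(desk, {"Vendor name": "Acme Corp", "Email": "ap@acme.example"}):
        print(line)
```

The `max_steps` cap is a deliberate guardrail in this sketch: an agent that keeps misreading the screen shouldn’t get to click around forever.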

As Ethan Mollick puts it in his early-access review, “This represents a huge shift in AI use.”

“AIs are breaking out of the chatbox and coming into our world. Even though there are still large gaps, I was surprised at how capable and flexible this system is already. Time will tell about how soon, if ever… but, having used this new model, I increasingly think agents are going to be a very big deal indeed.”

Of course, the issue isn’t that Claude’s evolution is possible. The issue will become what we humans do as a result.

NotebookLM podcast enhancements

Google is listening and moving quickly.

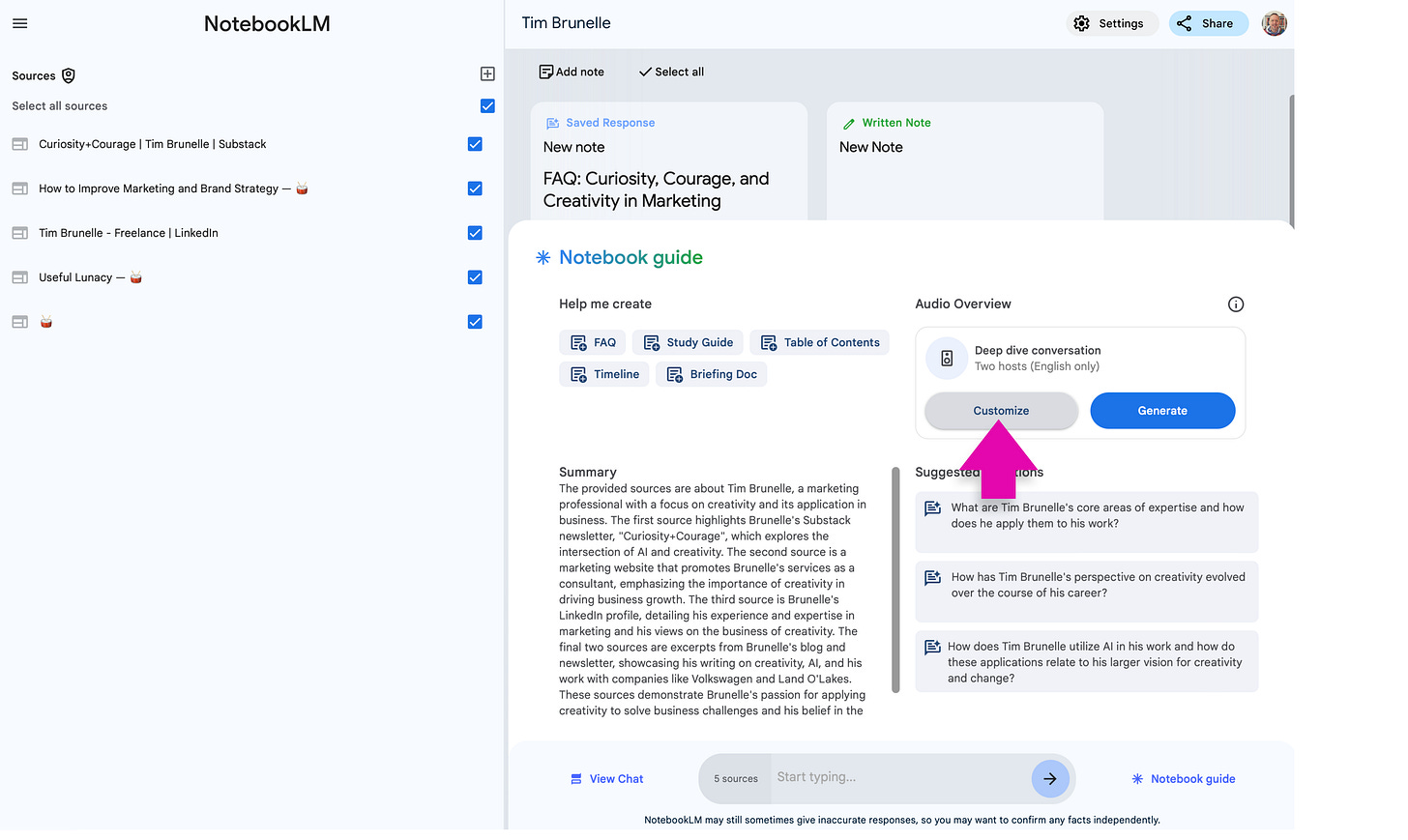

A month ago we were talking about the new “podcast” audio overview capability within NotebookLM, the multi-faceted AI platform. Click a button and Google’s AI generates a podcast with male and female co-hosts who pleasantly chatter on about whatever material you’ve loaded up. You could download the episode, but that was it.

Now you can customize the audio overview prompt, up to 500 characters. I’m assuming the underlying system prompt (the uneditable prompt guiding the podcast’s demeanor and aesthetics) remains in place.

If you’ve got 15 minutes and 40 seconds, give the entire update a listen:

Three worthwhile perspectives

1️⃣ Dario Amodei, CEO of Anthropic (the team behind Claude), authored Machines of Loving Grace. His money quote:

“I believe that in the AI age, we should be talking about the marginal returns to intelligence, and trying to figure out what the other factors are that are complementary to intelligence and that become limiting factors when intelligence is very high. We are not used to thinking in this way—to asking “how much does being smarter help with this task, and on what timescale?”—but it seems like the right way to conceptualize a world with very powerful AI.”

2️⃣ Ethan Mollick, Associate Professor at Wharton and author of Co-Intelligence, wrote Thinking Like an AI to mark the approaching two-year anniversary of ChatGPT’s arrival and all that has followed. He makes it clear:

“The real way to understand AI is to use it. A lot. For about 10 hours, just do stuff with AI that you do for work or fun. Poke it, prod it, ask it weird questions. See where it shines and where it stumbles. Your hands-on experience will teach you more than any article ever could (even this long one).”

3️⃣ Educator Marc Watkins published Engaging With AI Isn’t Adopting AI, making a keen point about AI literacy (bolding mine):

“A number of folks are upset at starting a culture around AI literacy in first-year composition courses and I’m not quite sure why. Engaging with AI doesn’t equal adoption. We can’t hide from AI, though I’d argue that’s the path many are attempting to take. The text-based generative tools used by students in apps like ChatGPT pale in comparison to the multimodal generative AI that can mimic your voice, image, likeness, and mannerisms. Just because you aren’t interacting with that form of AI when marking a paper or giving feedback doesn’t mean your students won’t see that elsewhere. AI literacy isn’t advocating for a specific position about AI—far from it. Rather, it aims to inform users and equip them with knowledge while removing the hype around these tools that are often marketed as magic to our students.”

🍁🍂 Perhaps even more worthwhile, I wised up and spent a few moments outside yesterday, appreciating the gorgeous ochre, carmine, rufous, orpiment, fulvous, coquelicot, and incarnadine leaves as their chlorophyll retreats.