131: Seeing in weird but useful new ways

A second final AI project using Firefly, Midjourney and data + lots of AI+Creativity links

🎧 Written to Re-TROS live on KEXP. Thanks, Rick! And then Louis Cole Sucks (live) with John Scofield and Mono Neon. Thanks, Jake!

We had a really awesome book club discussion last week about Ethan Mollick’s Co-Intelligence. Thanks to all the academics and marketers trying to make sense of culture and tech disruption by reading books! We’re going to regroup again in a few weeks. Stay tuned.

🤖🪑 AI for Artists and Entrepreneurs: Steve Bonoff

Last semester’s class included four Continuing Education students mixed in with the undergrads. I love this blend of ages and perspectives in a classroom. One of our CE students, Steve Bonoff (LinkedIn), came into final projects with a compelling business case:

“How do I help interior designers see in a way they’ve never seen before?”

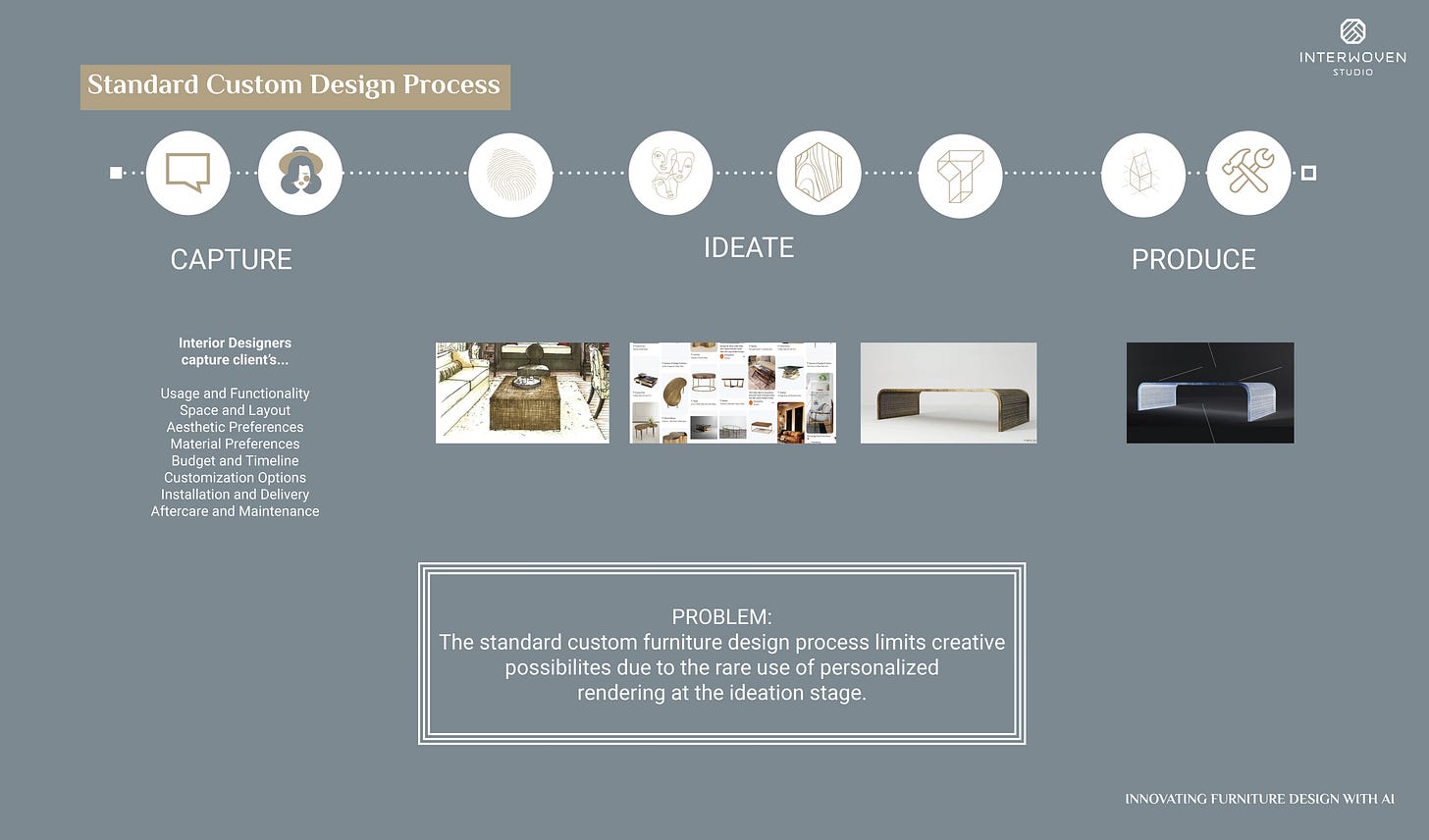

In the realm of custom furniture design, could generative AI help expand and expedite creative decision-making for a new fabrication substrate while simultaneously reducing the cost of personalized rendering? Questions like these sit at the heart of many “AI + Creativity” scenarios. We want to use AI to increase the volume of a specific creative activity, to unlock a narrow new application of it, or both.

In his presentation, Steve described Adobe Firefly’s “Structure” setting and its counterpart within Midjourney as the secret sauce of his solution. I give Steve a lot of credit for researching visual prompting techniques on YouTube, finding someone who seemed to have figured it out, and then hiring that person to help hone a solution. As Steve discovered, AI in its current state isn’t turnkey: in his use case, a custom database and structural reference images were necessary to fuel his generative approach. Here’s Steve’s presentation:

And here are the two key slides from Steve’s talk.

Congrats, Steve, on your work! Interwoven Studio launches June 17.

AI+Creativity Update

🙋🏽 I regret missing the second annual BrXnd AI conference, but I’m grateful they posted Tim Hwang’s 16-minute talk. We’re segueing into weirder territory with LLMs, in the sense that we humans are struggling to place, associate and relate to a technology built entirely on our own behavior. All that training data is us, which helps explain why prompt tips like “take a deep breath and solve problems and requests step by step” or “help me solve this and I’ll give you a tip” actually improve outcomes. This video is definitely worth your time.

🤖🔈 ElevenLabs released “text to any sound imaginable.” Pretty useful if you need sound effects. Expect something like this to become normalized inside Descript, Adobe or Apple’s production platforms.

🤖🎶 Suno’s v3.5 is now available to everyone and includes “text to 4-minute song” generation. I have to admit both surprise and concern with this tool. If my creative need calls for speed and tolerates low creative fidelity (i.e., “good enough to make the point is good enough”), then this approach to music creation works pretty well. The question remains: Will enough people need and want this approach to music creation to sustain the business model?

🤖🤔 Berggruen Institute’s Noema publication hosted this interview (video version) with former Google CEO Eric Schmidt. It’s useful for outlining three fast-evolving AI capabilities: 1) infinite context windows, 2) chain-of-thought reasoning in agents and 3) text-to-action (specifically within coding). Take his sense of urgency (and China-fearing) with a grain of salt.

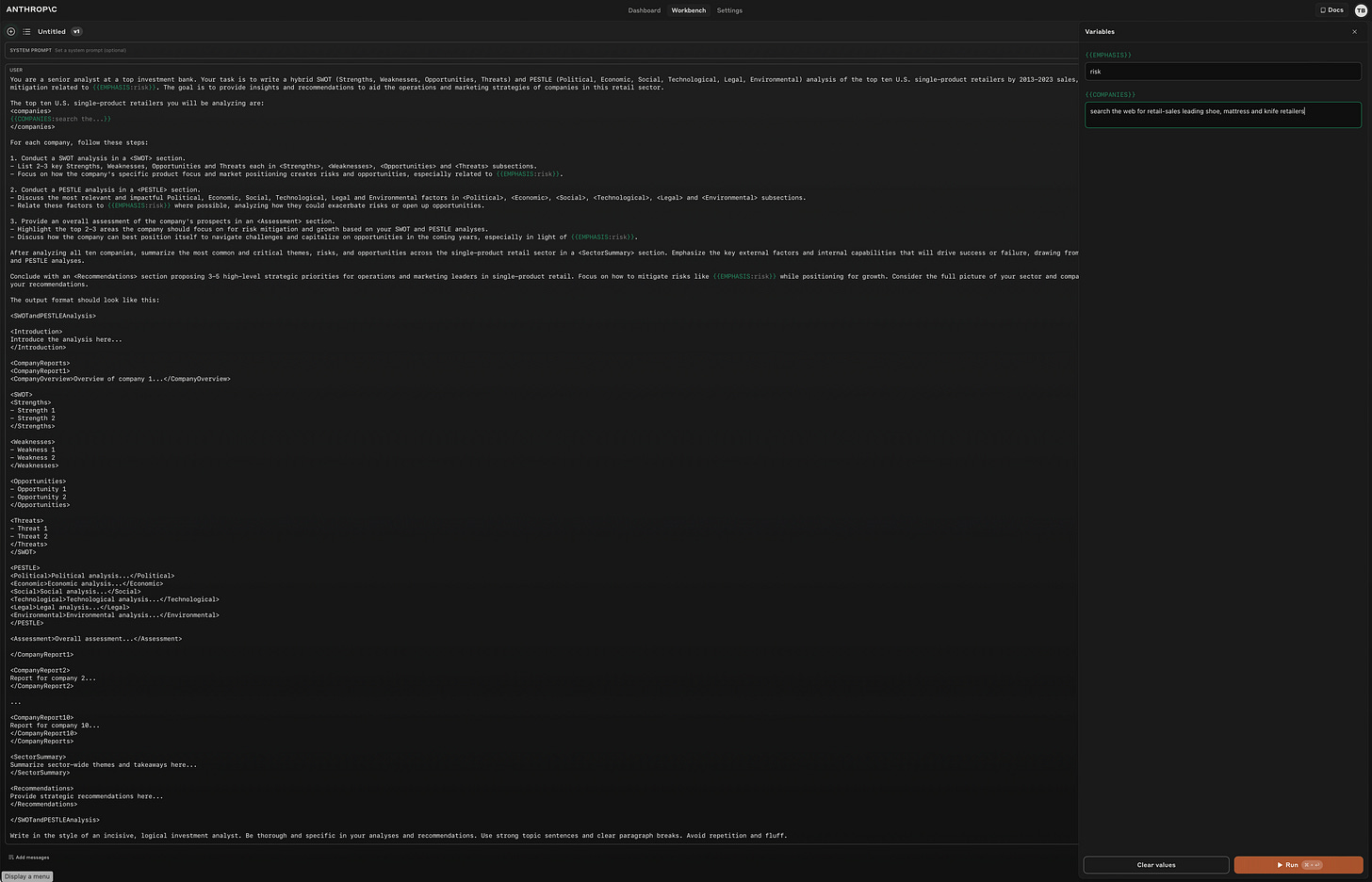

🤖 Prompt engineering has always been kludgey at best, and tricky for most. Now we have Anthropic’s new Prompt Generator (which uses their Claude LLM). Anthropic’s approach suggests the era of “AI will write your prompt for you” might be at hand, but don’t get too excited just yet. Here’s “BrandNat” from TikTok describing the outcome. And here’s my quick demo, focused on brand strategy:

After clicking “Generate Prompt” I got kicked over to the console with Claude’s more robust and specific prompt expanding on my input. If you didn’t know an LLM could function this way or didn’t know how to instruct a chatbot for an elaborate request, this “prompt refinement” is both illuminating and useful. We went from my ~55-word instruction to this lengthy prompt.

Then I clicked “Run” (upper right) to see how Claude would respond to Claude’s prompt of my…prompt 🤷🏻‍♀️.

Which triggered a modal window requesting variables, i.e., what do I want for {emphasis}? Clearly, risk. Claude also asked me to name {Companies}, but I want the LLM to do that for me, so let’s see how “search the web for retail-sales leading shoe, mattress and knife retailers” works out…

Okay, not great.

The markdown didn’t apply, and there appears to be an output limit.

But I can see how a console like this points to a more useful future, one in which you and I no longer have to master arcane prompting techniques. Hasn’t making the technology invisible always been the point, anyway?
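For the curious: those curly-brace placeholders like {emphasis} and {Companies} behave like ordinary template variables, and the modal window is essentially asking you to fill them in before the prompt is sent. Here’s a minimal Python sketch of that idea. The template wording, the function names and the sample values are my own hypothetical stand-ins, not Anthropic’s actual generated prompt or how their console is implemented.

```python
import re

# Hypothetical template in the style of a generated prompt (illustrative wording only,
# not the prompt Claude actually produced).
TEMPLATE = (
    "You are a brand strategist. Compare the brand positioning of {Companies}, "
    "with particular emphasis on {emphasis}."
)

PLACEHOLDER = re.compile(r"\{(\w+)\}")


def find_variables(template: str) -> list[str]:
    """Return placeholder names in the order they first appear, without duplicates."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def fill_template(template: str, values: dict[str, str]) -> str:
    """Substitute every placeholder; refuse to run if any variable is still blank."""
    missing = [name for name in find_variables(template) if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    print(find_variables(TEMPLATE))
    print(fill_template(TEMPLATE, {"Companies": "Company A and Company B", "emphasis": "risk"}))
```

The design choice worth noticing: the template refuses to run until every variable has a value, which is roughly why the console stopped to ask me for {Companies} instead of inventing them on its own.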

😕 I’m just getting up to speed on Adobe’s apparently weird new ToS, which suggests they want the right to scan all of your data. Quite a few people on social media quickly inferred that this covers even work under NDA. Notice the hard line: Agree, or Don’t Use the Product.

Here’s one TikTok on it. I currently license Adobe through MCAD’s agreement and have not seen the new terms pop up in any of my Adobe apps. I got the screen grab above from a lengthy VentureBeat article that includes Adobe’s response 👈🏾. If you’re going to read anything, read that story. Long story short: Legal terms are storytelling. They can build or destroy brand equity. In racing to “clarify” its position on cloud content, AI and training data, as Adobe claims it was doing, the company has set itself up for interpretations that harm its brand. Here are Adobe’s ToS.