066: Less labor? More hallucinating?

Books have been migrated, and Creativity + AI insights summarized

I moved my office over the holiday weekend. Up one floor from the basement, where it originated at the onset of the pandemic. Now I get to look out at nature through one window and down the driveway through another. Still have two more shelves to empty, move, and reset in what used to be my oldest son’s bedroom. (He’s now in the basement, where we won’t complain so much about the low-end rumble of his music production.)

We are preparing for an elevator installation. My youngest is disabled and uses a wheelchair. Also, he’s not getting lighter. If the plans work out, my new office will become the portal for access to the “main” floor. (The shaft will be installed on the exterior, running from the basement to the second floor.) So this weekend was a jumble of preparing for what we hope will happen, as far as anyone can predict a home renovation.

Yes, I 💙 books.

The good news is I am paring down the collection. I do not need late 1990s printing technique manuals, for example. My wife kindly suggested I organize them all by spine color. But I’ve decided to loosely organize them by:

Marketing/Advertising

Psychology/Behavioral Economics

Screenwriting/Filmmaking

Writing

Music

Books I Fully Intend to Read But Haven’t Yet 😬

Everything Else

If you and I wind up on a Zoom call, I can walk you through the method to my madness.

No creative entrepreneurship class this week. On September 11 we’re going to dive into accelerators and incubators, including Y Combinator and Techstars, with Marty Wetherall, a veteran of both.

So this week I figured we could talk about (creative) labor, given the holiday, and about the ways AI is influencing how we think, strategize, generate, and evolve ideas, i.e. how creativity works.

The best part about new things is all the other writers writing about the new things.

I had 23 browser and email windows open, each with some scintillating perspective on Creativity + Artificial Intelligence. When I wasn’t slogging books from one floor to another, I was consuming pixels. The Internet is amazing.

0️⃣ Every ad agency is using AI in some manner now. AdAge continues to update its analysis (paywalled) of how the networks and holding cos are strategizing and organizing. Everyone has launched proprietary tools, struck deals with Google and/or OpenAI, or both. Generally, the focus is oriented either toward summarizing complexity (e.g. retail media optimization) or toward unearthing value from data (e.g. predicting consumer trends). A few instances of generative AI within production pipelines were noted. File Under: You’re Not Alone. Everyone is investing resources because they probably agree with this next point.

1️⃣ File Under: He May Be Right? He May Be Crazy? Here’s a guy who has owned or owns lots of ad inventory: Media mogul Barry Diller asserts, “Generative AI is…going to redefine, reconstruct or lay waste to advertising as we know it.” Perhaps all of the above. Which means you should try to figure out what that means for you.

2️⃣ Because brands are not slowing down either. Walmart is equipping 50k office workers with a proprietary generative AI application. File under: New Phone, Who Dis? Missing from the reporting: what training and cultural incentives Walmart employees will have, beyond mere access to LLMs, to actually take advantage of it.

3️⃣ File under: Everything Old is New: The Neuron suggests, “If you're running a business, double down on crafting a distinct brand.” Ha ha. Of course. But now because, “You need to break away from the crowd of AI-generated content.” Which raises the question: Have you and your brand team considered what counts as distinction in your space, for your audiences, in late 2023? In other words, what’s the obvious “AI space” your brand can no longer own?

4️⃣ Or at the very least, embrace the weirdness AI can enable, writes Ethan Mollick. File under: Think Different. While “it is completely understandable that we have trouble with the weirdness inherent in working with AI,” he observes, we need to learn how to break away “from the common analogies we use to make AI seem normal.” AI isn’t normal, and the sooner we stop trying to constrain it within familiar terms, the better.

5️⃣ File under: Wait, Didn’t Seth Godin Write That Exact… Yup. He did.

(Mollick also documented several research studies seeking to clarify how AI can turn bad writers into less bad writers, and help inspire those with lower initial creativity to be more creative. More of this, please.)

6️⃣ Okay, so: “LLMs are not databases and not deterministic, and they don’t appear to have any underlying structural understanding of what they talk about,” suggests Benedict Evans (paywalled, September 3 column). Key words, and not a good band name: Underlying Structural Understanding. “So they don’t give you an answer to your question: they tell you what answers to questions like your questions would tend to look like” (italics mine). Louder for those in the back: An LLM isn’t generating thought; it is generating patterns.
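For the curious, here is a toy sketch of Evans’s point, assuming a drastically simplified picture and not how any production LLM is actually built: a model scores a few candidate next words, turns the scores into probabilities, and draws one at random. The candidate words, the scores, and the `temperature` knob below are all invented for illustration. Nothing gets looked up; the output is simply whichever continuation the dice favor, and a fluent but wrong answer is always on the table.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, temperature=1.0, rng=None):
    """Pick one candidate at random, weighted by its probability (no fact lookup involved)."""
    rng = rng or random.Random()
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical scores for completing "The capital of Australia is ..."
candidates = ["Canberra", "Sydney", "Melbourne"]
scores = [2.0, 1.5, 0.5]

# Same question, different random draws: the answer can change from run to run.
for seed in range(5):
    print(sample_next_word(candidates, scores, temperature=1.0, rng=random.Random(seed)))
```

Run it and you will likely see “Sydney” turn up alongside “Canberra”: a confident, plausible-looking, wrong answer. That is the “patterns, not thought” idea in miniature.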

Which is a potent segue...

7️⃣ What if hallucinations are the feature, not a bug?

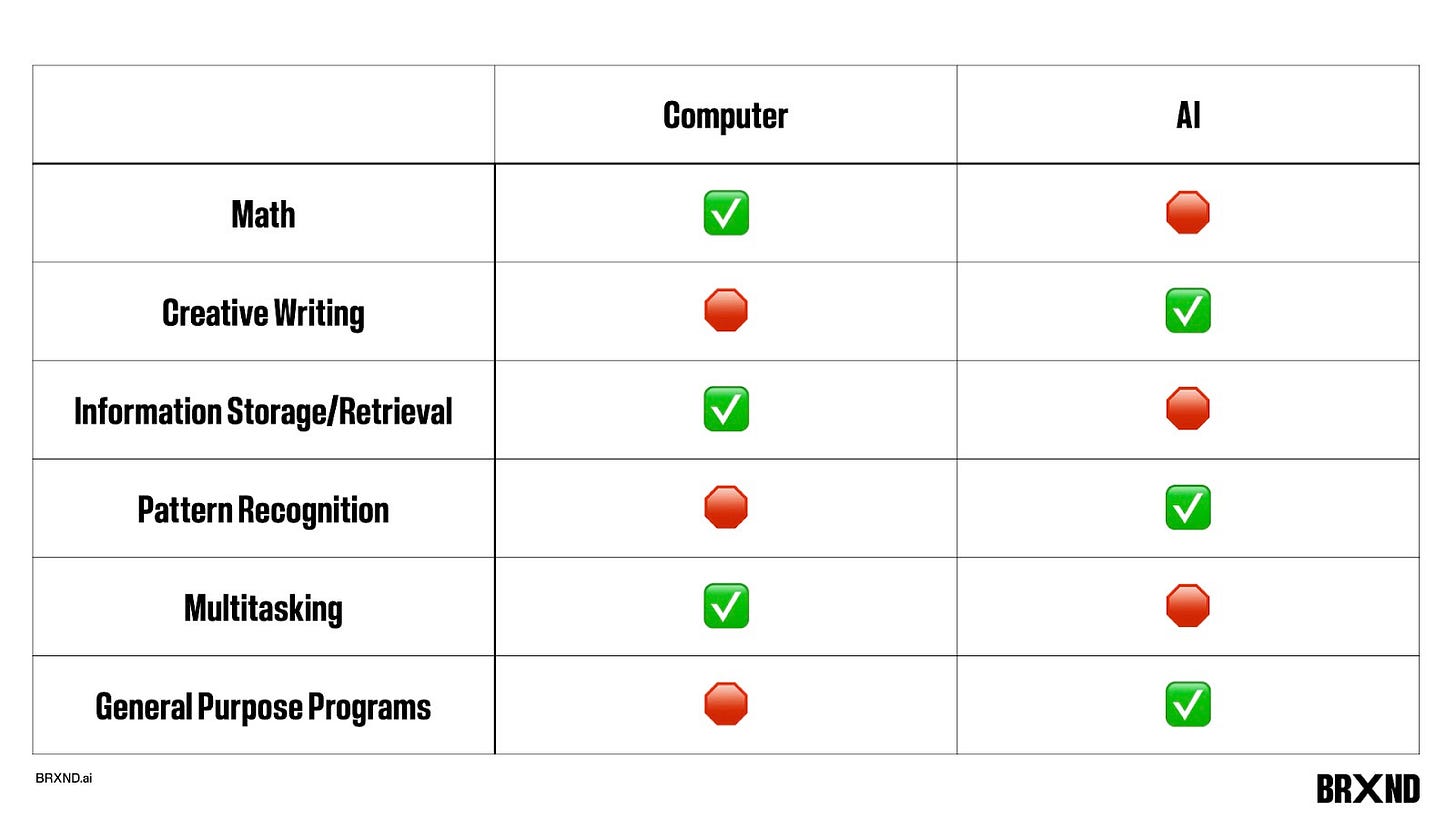

Way back in AI+Creativity history, which was May 2023, Tim Hwang gave a brilliant presentation at Noah Brier’s BrXnd Marketing AI Conference. It’s just 20 minutes, quite amusing, and very much worth your time. In it, Hwang argues, “LLMs are bad at everything we expect computers to be good at,” and “LLMs are good at everything we expect computers to be bad at.”

Brier helps clarify in his recap:

It’s the same sentiment Mollick expresses above: asserting the value of AI hallucinations and weirdness over whatever familiar analogies or metaphors pundits have tried to layer onto AI to make it seem less threatening.

Can we leverage LLMs to more easily achieve creative outcomes that are otherwise very weird, subjective, and challenging, and potentially more valuable? Less “how might an LLM mimic my limitations?” and more “how might an LLM open my capability to see and speak in new ways?”

The most useful illustration of Hwang’s perspective is when he demonstrates a “Sliders and Knobs” concept within ChatGPT. Prompt the LLM to imagine a continuum for a quality like “hipness.” Then expand from there. In this construct, you actually prefer the hallucinations and weirdness because they are more likely to provoke useful creative inquiry. In this scenario, the LLM operates a lot more like a creative partner who pulls a nonsensical idea from thin air only for you to realize it might just change the world.
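Hwang demonstrates the idea conversationally inside ChatGPT. If you wanted to make the same move repeatable, one option is a simple prompt template. The sketch below is hypothetical: the function name, the 0 to 10 scale, and the prompt wording are my own assumptions, not Hwang’s actual prompts, and the code only builds text that you would paste into whatever LLM you use.

```python
def slider_prompt(quality: str, subject: str, position: int, scale: int = 10) -> str:
    """Build a hypothetical 'sliders and knobs' prompt: place a subject at one point
    on a continuum for some quality and ask the model to describe it there."""
    if not 0 <= position <= scale:
        raise ValueError(f"position must be between 0 and {scale}")
    return (
        f"Imagine a continuum for '{quality}' running from 0 (none at all) "
        f"to {scale} (the most imaginable). "
        f"Describe {subject} at {position} out of {scale} on that scale. "
        "Don't play it safe: odd, surprising, even nonsensical details are welcome."
    )

# Sweep the "knob" to generate a family of prompts for the same subject.
for level in (2, 5, 9):
    print(slider_prompt("hipness", "a neighborhood coffee shop", level))
```

The design choice is the sweep: rather than asking for one “right” answer, you turn the knob and compare what comes back, treating the stranger responses at the extremes as raw material.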