023: But will you take advantage?

[After - Session 7] My bet: Our timeline will be defined by "Before AI-generative tools" and "After"

It’s unanimous. The readers of this newsletter want an executive summary at the top of longer posts. Consider it done. Thanks for voting!

TL;DR

This post contains links and references I couldn’t fit into Monday’s class

Plus reflection on ways in which my creative/artistic and strategic processes have been radically augmented

Learn how I made Deepfake Tim

What else can I do to make this newsletter better for you?

“I hadn’t thought of it that way before.”

Reflecting on this week’s major theme, Artificial Intelligence and Machine Learning, I unearthed an overabundance of examples for a 2.5-hour session. In many cases, I realized I was experiencing a familiar reaction, one I refer to as the definition of an effective idea: “I hadn’t thought of it that way before.” The best insights, the best advertising, the best design, the best experiences cause that reaction. Broadly speaking, AI-generative technologies seem to have that effect, too. They are helping us to see with fresh eyes, to experience with a different perspective, to think differently. And if that’s all there is, we’ve achieved something worthwhile.

And whoomp, there it is, via the ever-resourceful Ethan Mollick at Wharton:

“…the productivity gains that can be achieved through the use of general-purpose AI tools like ChatGPT seem to be truly large. In fact, anecdotal evidence has suggested that productivity improvements of 30%-80% are not uncommon across a wide variety of fields, from game design to HR.”

Clearly there’s so much more to be understood, to be gained, to be tested, evolved, taught, debated, and made normal. But I agree with Mollick:

“Every worker should be spending time figuring out how to use these general-purpose tools to their advantage.”

Mollick points to research on AI’s potential impact on knowledge industries, like teaching. My dad taught my siblings and me the names of every U.S. President by having us sing a melody he created. I’m tempted to mash that up with either every President as a Pixar character or every President, “but they’re all cool and they all sport a mullet.” AI-generative tech will clearly impact the future of advertising, but might it also make U.S. history just as thrilling, maybe even more compelling? Hmmm… does James Madison need a second look? I guess we can’t expect Lin-Manuel Miranda to illuminate every historical figure. Cue the robots!

In addition to teaching, I’ve been working on a client brand campaign. We’ve got four creative directions that hold water. So we’re in that space now where the strategy is solid and we’re building out TV, social, static/printed things, and employee engagement tactics. The creative process is basically three acts: First, you have a concept. Second, you try to express the concept and gain consensus with your partners on concept efficacy and interpretation. Third, you build and polish the ingredients for pitch theater.

Acts two and three have been utterly transformed by AI-generative tech. Expressing a concept, e.g. “a fish riding a bicycle,” has historically been constrained by old-world artistic talent. Can I draw it? Or can I persuade an artist to draw it for me? More recently, can I find images via search that closely represent my idea? At the end of the day, act two is only about legibility: can I translate what’s inside my brain onto a medium so you can see it and comprehend what I imagine?

I might not be able to draw very well, but I can type.

Here’s a fancy example. Shaun Harrison has a movie idea—Bashenga: The First Black Panther. Maybe he can draw, maybe not? Regardless, he chose to use the AI-generative tool Midjourney (in addition to After Effects and Photoshop) to visually convey his concept. He typed prompts and Midjourney generated art. No doubt this generative tech saved Harrison lots of time getting through acts two and three of the creative process. And that’s entirely the point. Without generative tools, Harrison’s idea might never have seen the light of day.

I use Midjourney pretty much daily now.

My act two/three creative process has been radically improved. I augment word processing (my legacy skill) with AI-generative image prompting. It’s enhanced how I think about and work through expressing advertising concepts. Asking a machine to generate a visual based on my words, and being able to evaluate the result in mere seconds, is hugely informative.

I’m still not quite settled into AI-generative writing, however. I suspect it’s because I feel more capable as a writer, and don’t need/trust the tool? But I’ve been experimenting with Type.ai. I’ve got some ad headline writing tasks due next week, and will play around with generative writing.

Enhancing business analysis and marketing strategy

I will absolutely grant you the above result via Bing AI Chat is meh. But if you rarely perform S.W.O.T. analysis, or are overwhelmed, or didn’t get an MBA, then a generative result can be the beginning of a life or career saver/advancer.

To be clear: it took me longer to write the prompt than Bing to respond!

So, naturally, I asked a follow-up.

We could keep going, Bing and I.

What used to take time and expertise now doesn’t have to.

To the cynical among us, remember Let Me Google That For You? Our excuses for not knowing things are getting fewer and fewer as AI-generative tools create access and enhance the means to perform basic strategic research.

I’m definitely using Bing AI more than ChatGPT to wrestle with business analysis, as well as audience insights and strategy. It’s also useful for survey, quiz and test construction. Here’s a pragmatic example: Using Bing AI you can interrogate a YouTube video while watching it.

We’re just getting started.

I Do Not Exist

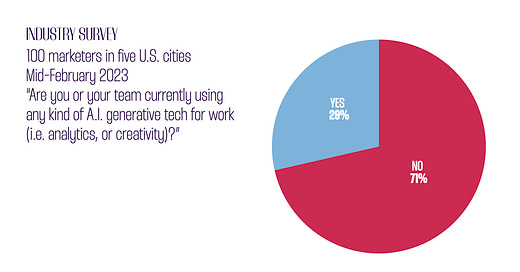

A couple weeks ago I moderated a MIMA panel discussion on AI-generative tech. To promote the event, I leveraged various tools to create Deepfake Tim.

Here’s how: I asked ChatGPT to write a first-person script introducing me and my thoughts on creativity fueled by artificial intelligence. Because I’m a control freak, I ended up editing the final script from six different iterations it generated. Then I gave ElevenLabs a sample of my voice (40 seconds of me reading random material) along with ChatGPT’s script, and asked it to generate “me,” which is the voice you hear in the video. Then I uploaded a photo (with my mouth closed) to D-ID, along with ElevenLabs’ MP3 audio file, and a few minutes later downloaded Deepfake Me telling you all about “my” perspective on AI-enabled creativity. The entire process cost $11 and took maybe 25 minutes. h/t to Professor Ethan Mollick for his tutorial.

The event was a success.

Of course we asked ChatGPT to recap. (The recap includes lots of useful links as well.)

More Links

The days of This Person/Cat/Rental Does Not Exist have transformed into AI-generative modeling. Also, AI-generative voiceover. (I’ll be using that tool again today for a project.) I can see the case for generative characters (visual and verbal) in service of wayfinding, FAQs, and general queries. When all I’m trying to do is inform, generative tools (just like iPhone content capture or MP3s) will be “good enough.”

And what’s real, anyway? What if Life Imitates AI Art? (I’m still thinking “AI Fingers” is a decent band name. Or a detective.)

The End.

MCAD is on Spring Break next week, but this newsletter will keep on keeping on. Look for at least two posts—one Monday, one Wednesday. I’m taking Sunday off.