160: Mixed bag

Updates across Midjourney, Runway, and Canva, plus AI ethics

I want to stop using the word “unprecedented.”

Yet blink and you miss another flurry of AI updates. Never mind all the amazing creativity, such as the Tesco “logo” billboards, the Shaping Tomorrow video podcast from Hear Art focused on deaf creatives, Apple’s “Lost Voice” ad and Personal Voice technology, and Adam Ritchie’s Mission Control training program, which focuses on “demystifying the process behind idea creation” for those who don’t see themselves as creative. I applaud Adam for tackling this head on: “one of the most pervasive myths in creative industries is that creativity is some kind of natural-born gift—a talent that only a few people possess.”

Anyway, there’s an AI-fueled capability I need, which I suspect already exists in crude forms but isn’t ready for prime time. It would review all my incoming sources (email, text, messaging) and web traffic (especially TikTok after 10pm), then distill a “Here’s what the AI thinks Tim would really care about” result. Give me a Top Five by type (e.g. Midjourney updates, accessibility news), or sort by the vocabulary of my current projects. This feels inevitable.

All of which is another way of saying that the broad umbrella of creativity and strategic thinking (circa late October 2024) is going through mind-numbing change.

Thank goodness for Cometeer coffee. IYKYK. And music discoveries including Big Bill, Chinese American Bear, and Soft Launch. And

was right about that new Ladytron single. 👏🏾 Oh, and yesterday was the one-year anniversary of not getting screwed while buying a car.

Lots of Midjourney updates

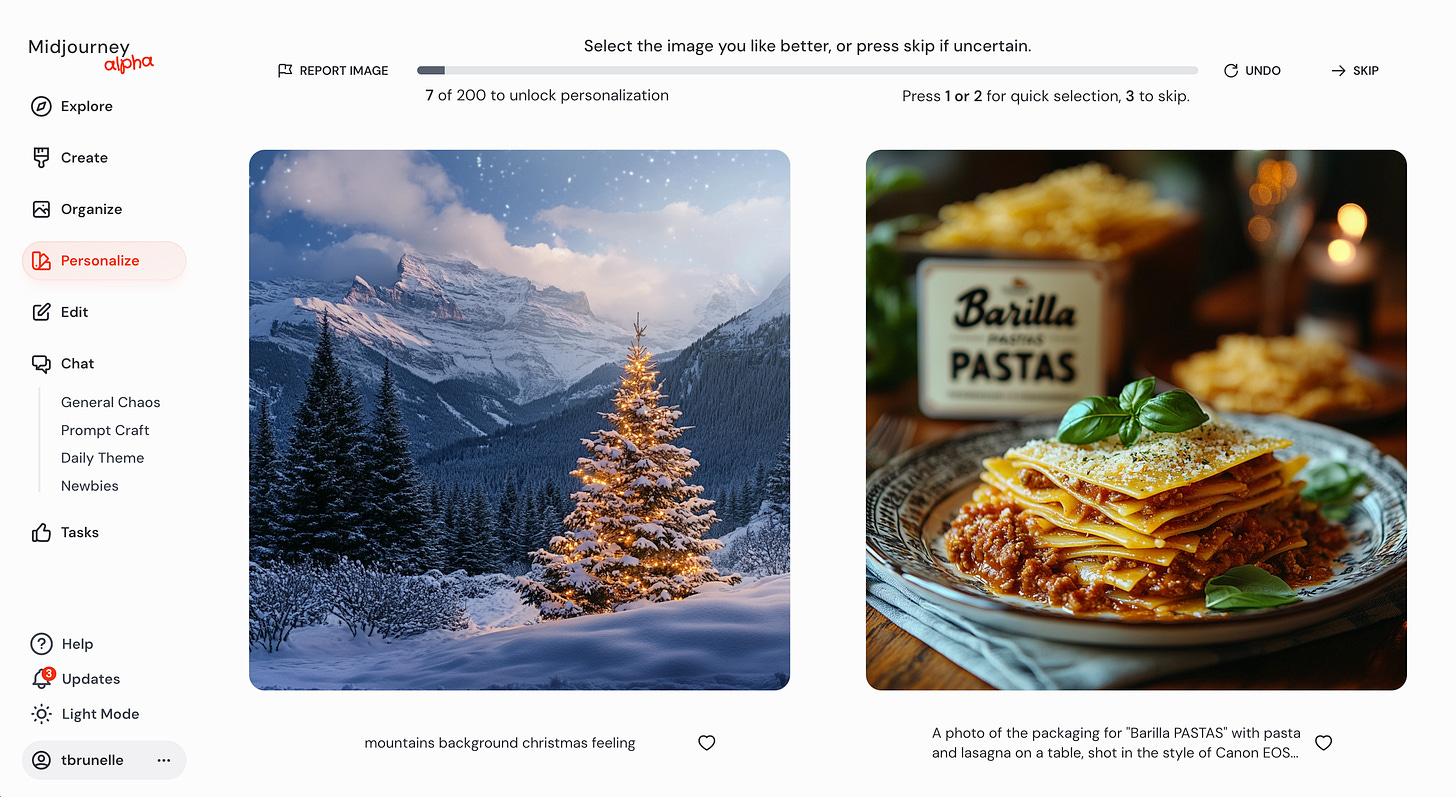

There were at least two intriguing updates within Midjourney this week. The first was a “personalization” approach, and the second a new way to leverage AI to work with uploaded images.

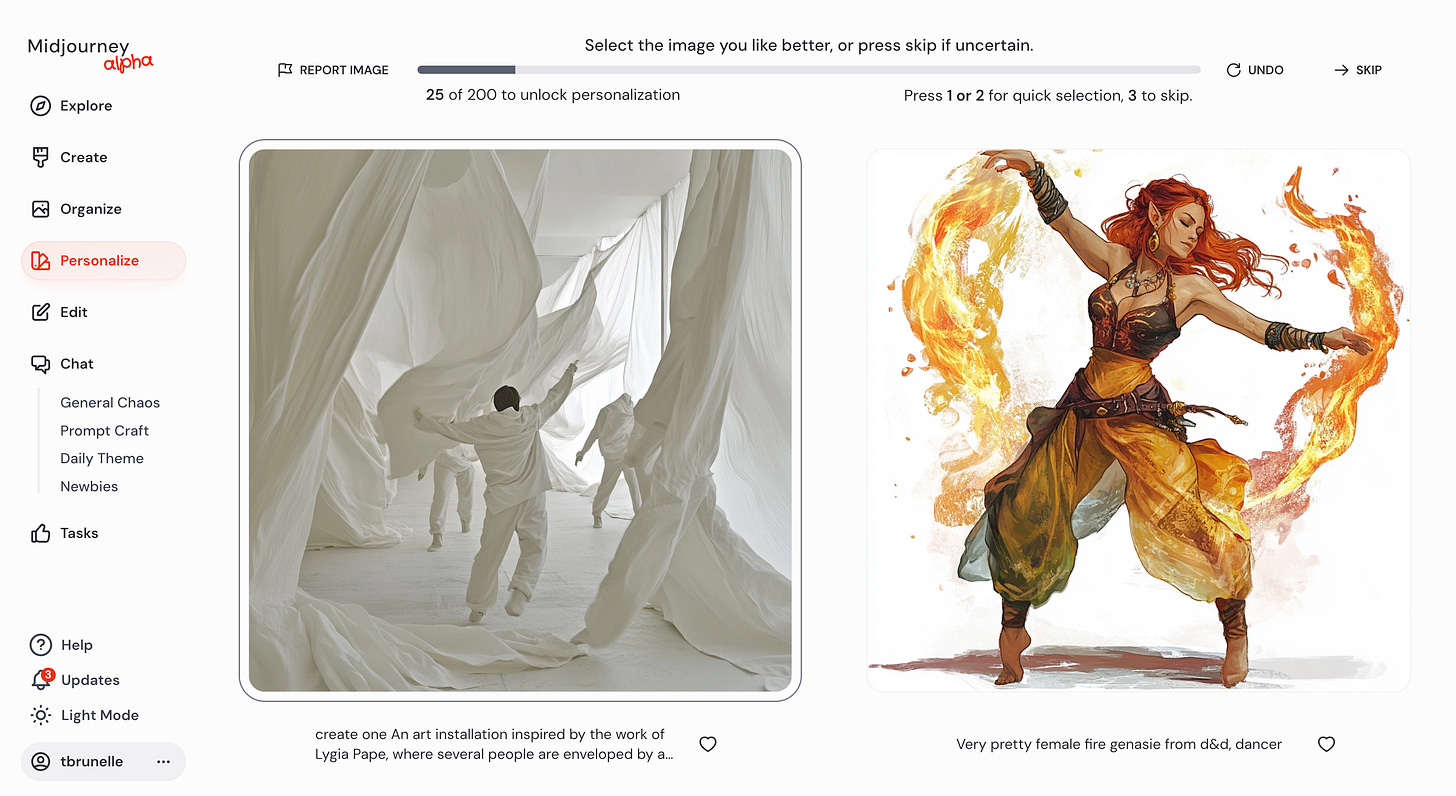

Personalization (more info here) takes advantage of your unique prompting and editing history, along with the results of a test in which you choose between pairs of images. In short: wouldn’t it be nice for Midjourney to remember your obvious and frequent preferences? On the one hand, sure, of course, nice. On the other, I can see how art directors who need to explore diverse styles might resist.

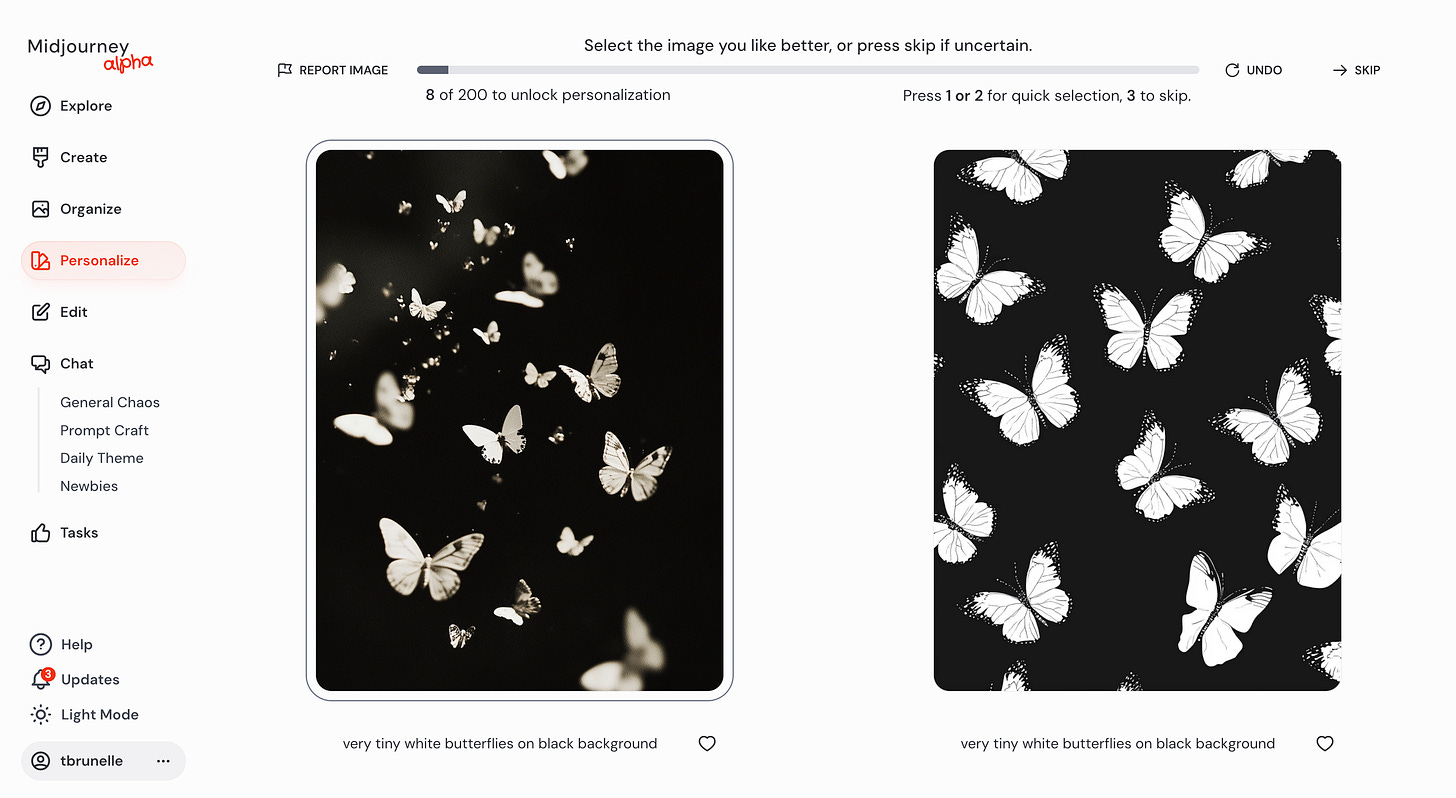

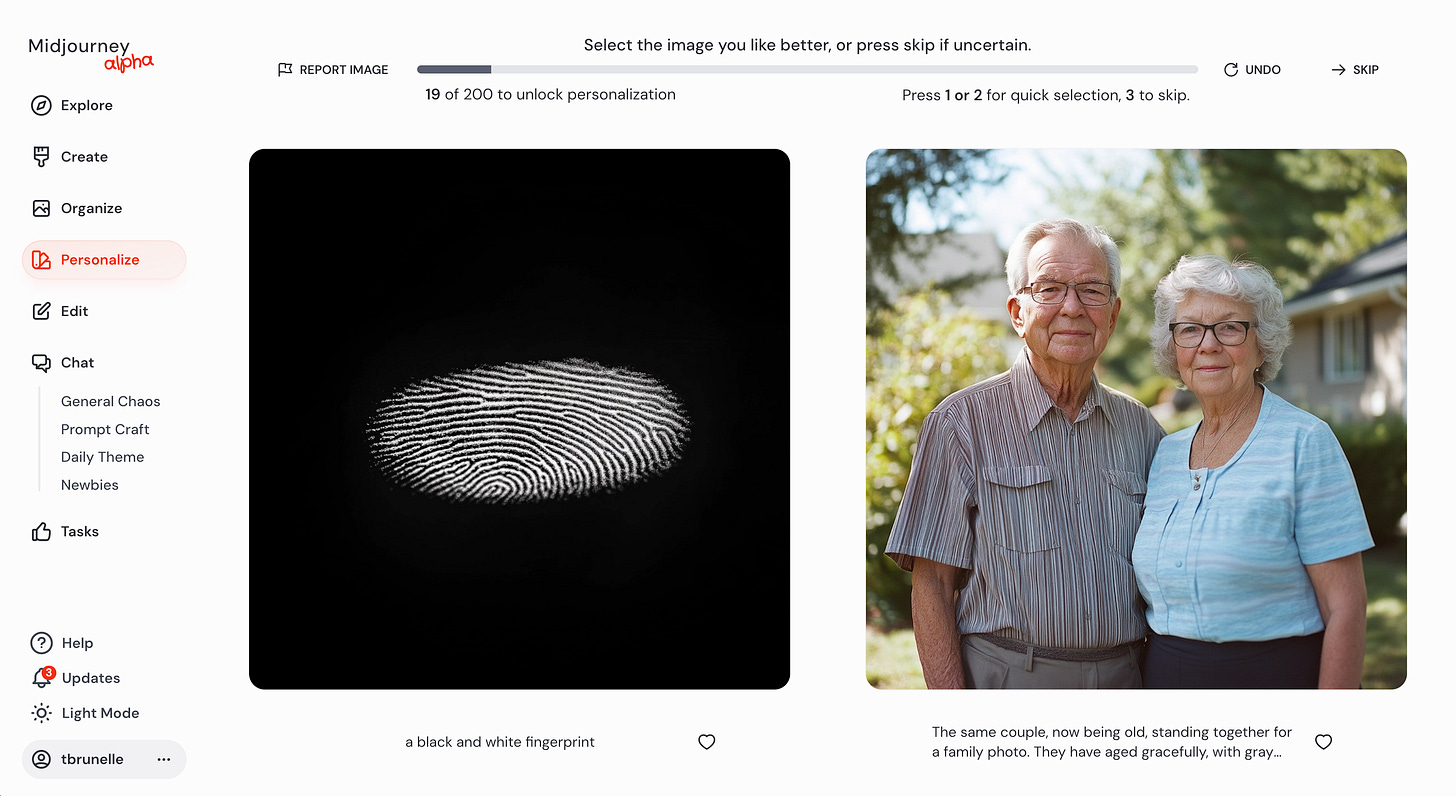

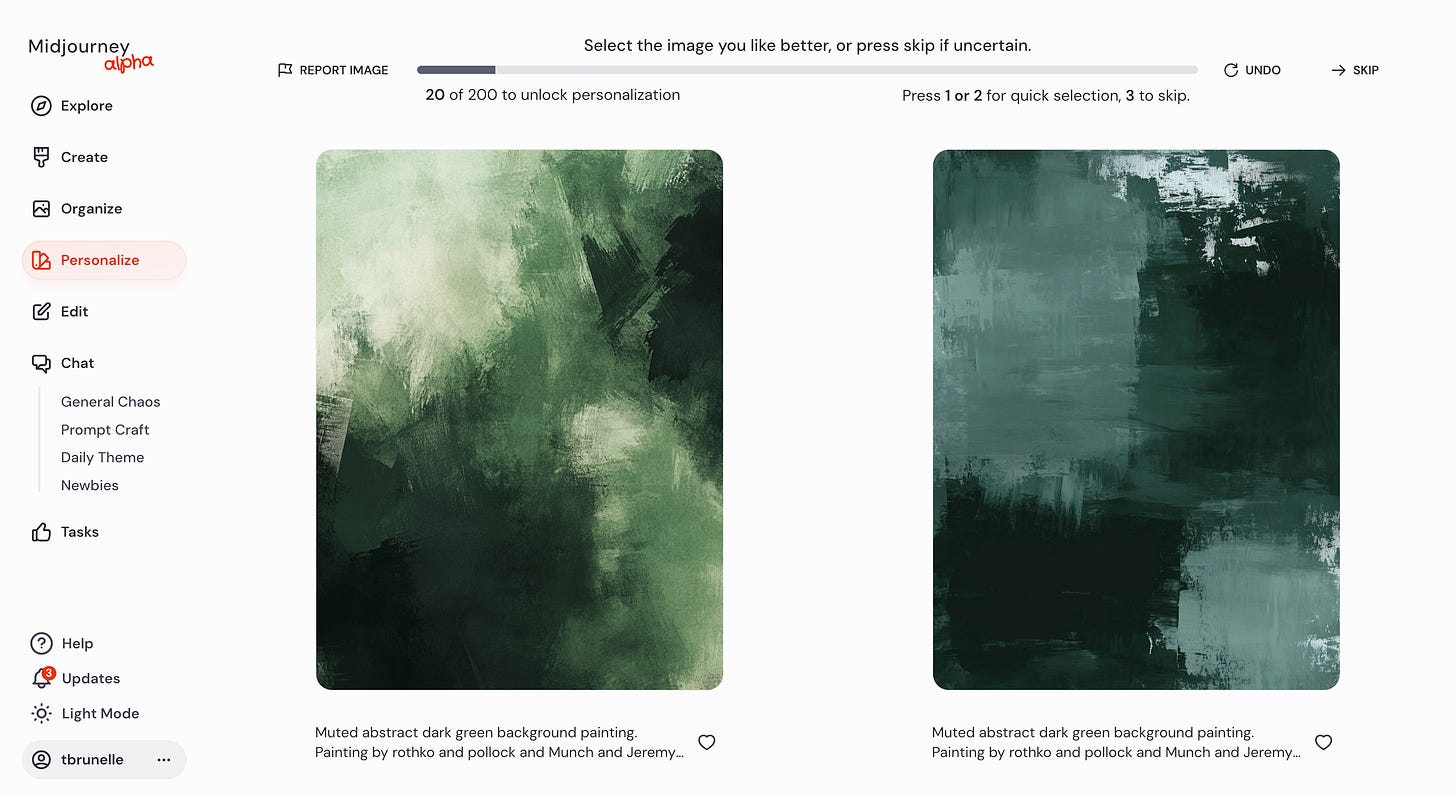

Here are a few grabs from my experience setting up personalization. Note the importance of skipping a pair, as well as of the testing itself: sometimes the choices are radical, sometimes not! Not preferring is as important as preferring. I fully suspect your results will be wildly different from mine. Still, it would be interesting to see, for example, “people who selected image X also preferred image Y.”

In addition, Midjourney announced an External Image Editor function, which “lets you upload images from your computer and then expand, crop, repaint, add, or modify things in the scene.” They’ve also updated the V2 AI moderator (whatever that is), which “holistically examines your prompts, images, painting masks, and output images” in order to “reduce false positives and give people more freedom than ever.” Again, whatever that means.

But this editor function is amazing, especially the ability to use an existing image (or one you’ve edited) as a reference to “retexture” it: keep the shape of the library, the furniture positions, and so on, but shift the style from mid-century to futuristic.

The Statement on AI Training

The conundrum is sad.

The British composer and former AI executive Ed Newton-Rex, founder of Fairly Trained, notes that AI tech firms are “willing to pay ‘vast sums’ for people and compute,” yet they “expect to take training data for nothing.”

According to The Guardian and TechCrunch, Fairly Trained is leading a petition signed by more than 11,000 people, including hundreds of well-known creatives.

🤖 =

🎨📷🎥🎭🎶 = nothing

I mean, are we surprised? Art and creativity have always been positioned by those outside the realm as a freedom of the mind, with an economic emphasis on “free.” Thank goodness for lawyers.

It’s clear the tech firms will use every means to resist, dodge, and distract from compensating humans for the creativity that not only made AI possible but also fuels AI’s economic progress. It has always been, and is only, about the money.

Runway’s new character animation pipeline

A long time ago I was fortunate to spend months working with Psyop animating some spots for VW (for which Peter DuCharme composed the music). I remember our producers continually reminding us that animation takes the longest. I mean, apparently it takes 50 hours to render a single frame of a Pixar film.

Here’s where AI steps in to reduce complexity and speed up the process.

“Our approach [is] driven directly and only by a performance of an actor and requiring no extra equipment,” Runway announced as it debuted its Act One character animation capability.

Okay, so it’s going to be easier to animate a character; Adobe is following a similar path. Fine. But what this really points toward is creative exploration. Instead of having to lock in a character or illustration style before you animate, Act One suggests that decision can shift later in the process, making it possible to test all kinds of options that were previously too resource-intensive.

Lots to think about here.

Canva rolls out all kinds of updates

I know far more marketers than creatives who use Canva. The genius of Canva boils down to templates and extensibility, especially if you’ve defined a brand’s style (fonts, colors, grid systems, logos) within the platform. My sense is that in-house agencies, now and in the future, should broadly adopt platforms like Canva for the wide range of sales enablement, HR, and L&D tasks. In-house creatives set the boundaries, then everyone else is empowered to wreck them. I kid. I kid.

So it means something when generative AI flows seamlessly inside an environment like this. Canva just announced Dream Lab (solid reporting from The Verge).

TL;DR: Solving creative problems through AI inside platforms like Canva is getting more intuitive, with less friction and more actionable results.