156: Accounting for culture

When AI workplace theory meets water cooler reality; Hallucinations vs Evals; AI+Creativity updates

The kids are all right.

Especially if they’re making music in Argentina.

Like many on the Internets I am obsessed with Ca7riel & Paco Amoroso’s Tiny Desk performance.

This band is TIGHT. Javier Burin's keyboard solo and the horn section soli on “EL ÚNICO” channel the best of Prince, Stevie Wonder, Tower of Power and Cory Wong. Based on this interview, it’s clear these kids are not kidding when it comes to skill, technique and soul. Here’s a useful deep dive from Jeanette Hernandez at Remezcla for those of us just getting up to speed, asking “espera, ¿qué es esto?”

👋🏽 Oh, and! I recorded a fascinating interview yesterday about music, creativity and AI. It’ll be out next week.

Is productivity all that happens when AI is infused in our work processes?

It’s amusing to look back on the late summer of 2022, when no one (except the real nerds) was talking about generative AI. I certainly wasn’t. We’re fast approaching the two-year anniversary of ChatGPT’s arrival and the dramatic changes that followed: curriculums and student behaviors in academia; procurement, process and L&D issues across corporate America; never mind the explosion of ways to approach and implement creativity.

Yet for all of the workplace studies and surveys of humans apparently using AI at work for work, we seem to have established very little about what to expect. It feels like we want to know how this ends, and we’ve barely begun. And the paintbrush we’re using to tell this story is perhaps the wrong one.

How we talk about—how we evaluate the value of—technology matters. As much smarter people have recognized, culture eats strategy for lunch. If our tech adoption strategy is rooted in old norms, old habits, then culture won’t evolve as optimally as we might like.

I’m reacting, specifically, to the Harvard survey linked above, and recounted by NPR’s Planet Money. The August 2024 survey data claims “U.S. adoption of generative AI has been faster than adoption of the personal computer and the internet.” Even further,

“we estimate that between 0.5 and 3.5 percent of all work hours in the U.S. are currently being assisted by generative AI.”

Fine. I fully agree. How does that figure align with your experience?

Then it leads to Planet Money’s money question: “What does this mean for the economy?” They make a specific correlation (bolding mine):

“Look at the smartphone. If I told you back in 2006, the year before the iPhone was released, that we'd soon all have supercomputers in our pockets, able to search the internet, give us precise directions to anywhere, send emails to and do video calls with co-workers and clients, order basically any product or service, translate languages and on and on — you might think we’d see an explosion in productivity. But smartphones seem to be more a tool for pleasure and distraction than an incredibly impactful work tool. We haven't seen a huge boost in productivity growth since its mass adoption well over a decade ago.”

And I completely disagree.

The “more labor done in fewer hours” paintbrush is an ineffective means of telling the broader story of productivity—as it relates to smartphones back then and AI now. Maybe it helps if we look at a chart.

In other words, ChatGPT helps you write that sales pitch faster, so you can now write two sales pitches in the time one used to take. I guess you could call that a productivity gain.

But what if you didn’t have to write the sales pitch at all to achieve a more profound effect?

What if, because of smartphones, you weren’t tied to a desk eight hours a day and could work in other locations? Might more, and more surprising, benefits accrue than simply more labor in fewer hours?

It’s the “generating new ideas” bar I’m most interested in above. Another way of putting it is my favorite question: “What can we do as a result of AI which we couldn’t before?” Or as Microsoft AI CEO Mustafa Suleyman puts it in a recent blog post, “What matters is how [AI] feels to people and what impact it has on societies. It’s about how it changes lives, opens doors, expands minds and relieves pressure.”

Looking at AI through narrow lenses of the past, especially the lens rooted in industrial labor, doesn’t help us seek out, prepare for or benefit from capabilities we’re only now beginning to comprehend.

Hallucinations vs Evals

As we all know, lawyers and judges don’t like LLM hallucinations. So how did Casetext convince Thomson Reuters to spend $650MM acquiring its generative AI legal case management product last year? Turns out, not by “raw dogging prompts” or judging quality of output by vibes.

Instead, they wrote thousands upon thousands of code/prompt evaluations. They broke down elaborate prompts into tiny, discrete elements which could be evaluated—is this step correct, or not? This is the hard work we’re just now realizing might be necessary if and when LLM hallucinations are verboten.

Y Combinator interviewed Casetext CEO Jake Heller, who paints a picture we don’t often see through the stereotypical lens of generative AI. Here’s the pertinent excerpt on building an LLM product which apparently returns zero hallucinations, and that is what creates distinguishing value.

As Nate Jones reacted,

“AI systems must be instructed with extreme precision, at a granular level, to prevent mistakes. As tasks become more complex, developers need to break them down into smaller, understandable steps.”

In other words, AI isn’t a panacea, isn’t a magic cure-all. The solution for reducing or entirely removing those confident hallucinations boils down to engineers and product managers focused on time-consuming detail.
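To make “is this step correct, or not?” a little more concrete, here’s a rough sketch of what one such evaluation might look like. To be clear, this is my own illustration and not Casetext’s code: the step (`extract_citation_year`), the `EvalCase` structure and the test cases are all hypothetical, and the step is a plain function standing in for what would really be an LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable


# Hypothetical stand-in for ONE small step of a larger prompt chain.
# In a real system this would call an LLM; a plain function keeps the
# sketch runnable on its own.
def extract_citation_year(text: str) -> str:
    # Return the first four-digit number wrapped in parentheses, or "".
    match = re.search(r"\((\d{4})\)", text)
    return match.group(1) if match else ""


@dataclass
class EvalCase:
    name: str        # label shown when the case fails
    input_text: str  # what we feed the step
    expected: str    # the single correct answer for this step


def run_evals(step: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through one step and record pass/fail per case."""
    results = {c.name: step(c.input_text) == c.expected for c in cases}
    return {
        "passed": sum(results.values()),
        "total": len(cases),
        "failures": [name for name, ok in results.items() if not ok],
    }


# Illustrative cases only. A real suite would have thousands of these,
# per step, covering the odd inputs that trip the step up.
cases = [
    EvalCase("simple", "Miranda v. Arizona, 384 U.S. 436 (1966)", "1966"),
    EvalCase("no_year", "Miranda v. Arizona", ""),
    EvalCase("year_not_in_parens", "Decided in 1966 by the Court", ""),
]

report = run_evals(extract_citation_year, cases)
print(report)
```

The point of the design is that each step is small enough to have one right answer, so a failure points at a specific step instead of a vague sense that the overall output feels off.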

AI+Creativity Update

🤖📋 ChatGPT has introduced Canvas. I’m playing around with it, and will share deeper thoughts soon. In short—imagine a more interactive UX for co-creating/editing with AI. Looks promising.

🤖 Meta recently rolled out more enhancements to its AI toolkit. Remember, there are hundreds of millions of users inside Meta, many of whom are just coming to grips with AI. 1) Now you can chat with Meta’s AI to edit images. “Make the background blurry.” This is about making AI use useful, seamless, and boring. AI is becoming a utility. 2) They’re testing automatic dubbing and lip syncing. Imagine your Reel’s originally in English. You only speak English. But now, through AI, you appear to speak Spanish fluently. This could be huge.

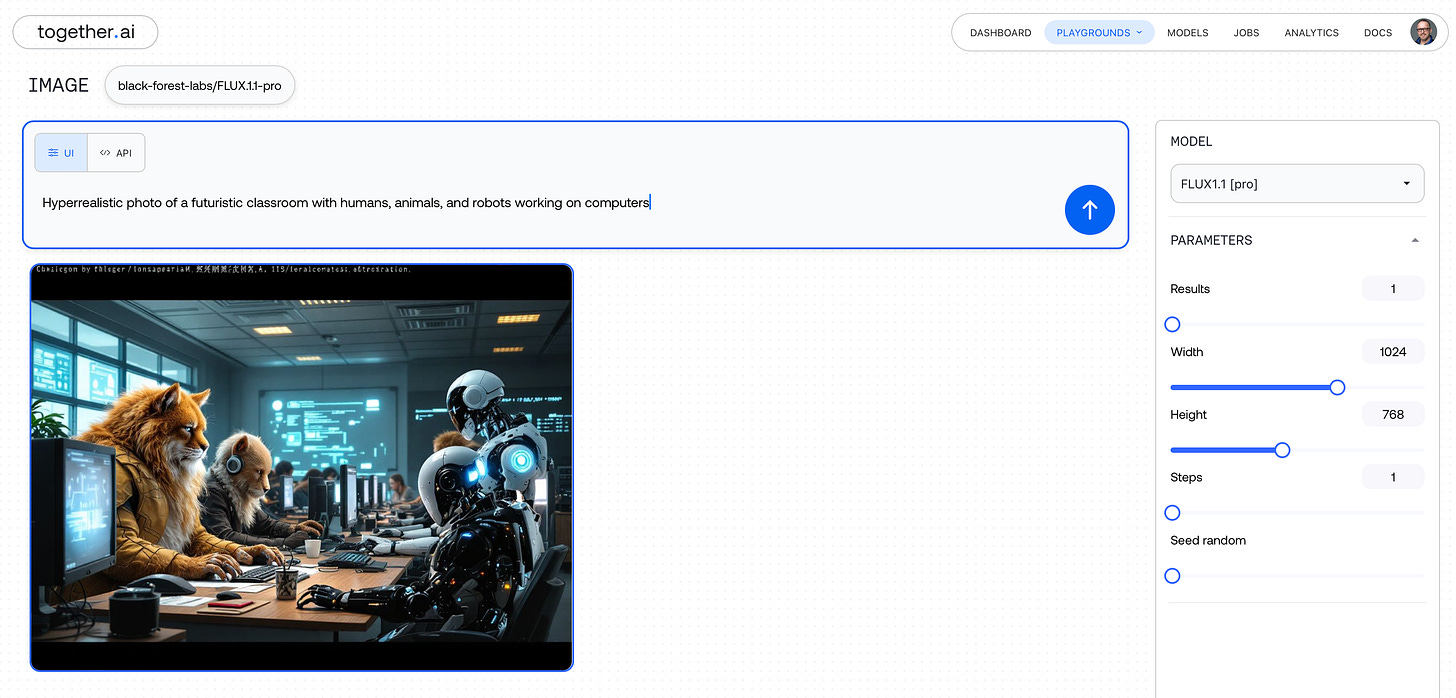

🤖🎨📸 The Flux text-to-image generator has been upgraded. Black Forest Labs rolled out its 1.1 model. You can play around with it by opening an account at together.ai, Replicate, fal.ai, or Freepik. It’s really fast. And my quick few tests suggest image quality beats Firefly, and competes with some Midjourney and Meta (Llama) results.

🧸🎉 Speaking of music, there’s this genius record release anniversary idea. (Thanks, Greg!)

✏️🎉 Speaking of anniversaries, the OG blogger Dave Winer just celebrated his 30th year of writing online. He’s a hero. Congrats, Dave!