🐘 Does King Crimson’s song Elephant Talk get stuck in your head like it does mine? I have to admit I’m very excited to see the BEAT tour with Adrian Belew, Steve Vai, Danny Carey and Tony Levin, who’s 78 and still touring! This interview with Tony starts slow but delivers a lot of amusing detail, especially about his tour photo albums.

Meanwhile, there’s been lots of news and conversation this week around generative AI.

🤖🎥 They said “designed to be commercially safe” twice

Adobe Firefly is promoting new video capabilities “available later this year.” More details via The Verge. It’s very clear Adobe is speaking to legal teams and anyone else concerned about copyrightability, ownership and attribution.

The outputs look at least as compelling as Runway’s, and as what OpenAI is promising with Sora. Adobe’s advantage is its install base among creatives, and (in theory) the ability to smoothly integrate what you generate into an existing edit timeline.

🤖🍓 OpenAI’s ChatGPT o1-preview is live

It’s currently available only to ChatGPT Plus and Team users. It can’t browse the web or process files or images.

But it appears to have some ability to “reason.”

And while you or I might not find the newest iteration of OpenAI’s LLM all that exciting, it does illuminate a useful path forward. As The Verge described the contrast, current-day LLMs are “essentially just predicting sequences of words to get you an answer based on patterns learned from vast amounts of data.” But o1-preview takes a different approach, incorporating reinforcement learning as well as “chain of thought” processing to arrive at an answer to your prompt. Wired explains:

“LLMs typically conjure their answers from huge neural networks fed vast quantities of training data. They can exhibit remarkable linguistic and logical abilities, but traditionally struggle with surprisingly simple problems such as rudimentary math questions that involve reasoning.

Murati says OpenAI-o1 uses reinforcement learning, which involves giving a model positive feedback when it gets answers right and negative feedback when it does not, in order to improve its reasoning process. ‘The model sharpens its thinking and fine tunes the strategies that it uses to get to the answer,’ she says.”

Ethan Mollick suggests “fun things to do with your limited o1-preview,” including “Give it an RFP and ask it to just do the work.”

I asked ChatGPT’s o1-preview to first write itself a challenging prompt in the realm of marketing and advertising, then respond to it. Is the output stunning? Of course not. But 16 seconds later I had a decent outline for a marketing campaign with hints of a few potential ideas. That’s certainly more actionable than what an entry-level agency account executive might produce without AI, even given a few hours or days to work on it. The point being: this tool is getting more nuanced, and it’s becoming better able to work alongside strategic and creative thinkers. If you have access to it, you should be playing around with it.

🤖🇫🇷 Bonjour, Le Chat

Via AI Tool Report, “French AI start-up, Mistral, has released its first multimodal model, Pixtral 12B, capable of processing and understanding both images and text.” Sign up and access Le Chat here.

Here’s another competitor to Claude, ChatGPT, Perplexity, Gemini, et al. Is it better? You’ll have to play around with it and decide how its personality and processing fit your needs, or not.

And that’s kind of the point right now: as users, we should be experimenting far and wide, running the same prompt across multiple AI tools to discern how each might work for us.

🤖🚕 Waymo fewer accidents?

It seems inevitable.

Especially if, as the data reported by Neuron Daily suggests, “Waymo’s injury crash rates are approximately 60-70% less than human drivers.”

Idea #1: It’s always about the money. It will be the insurance companies that offer massive discounts for using AI-operated vehicles, while simultaneously raising rates for any human who still needs to drive. That flips today’s reality.

Idea #2: Suddenly, distracted driving becomes a good thing. The way to get people to embrace AI taking the wheel could be to enable all the distractions human drivers get penalized for.

Idea #3: Access to your choice of AI-driven vehicles via subscription. Guaranteed availability and “uptime.” Owning or leasing a car goes the way of the landline.

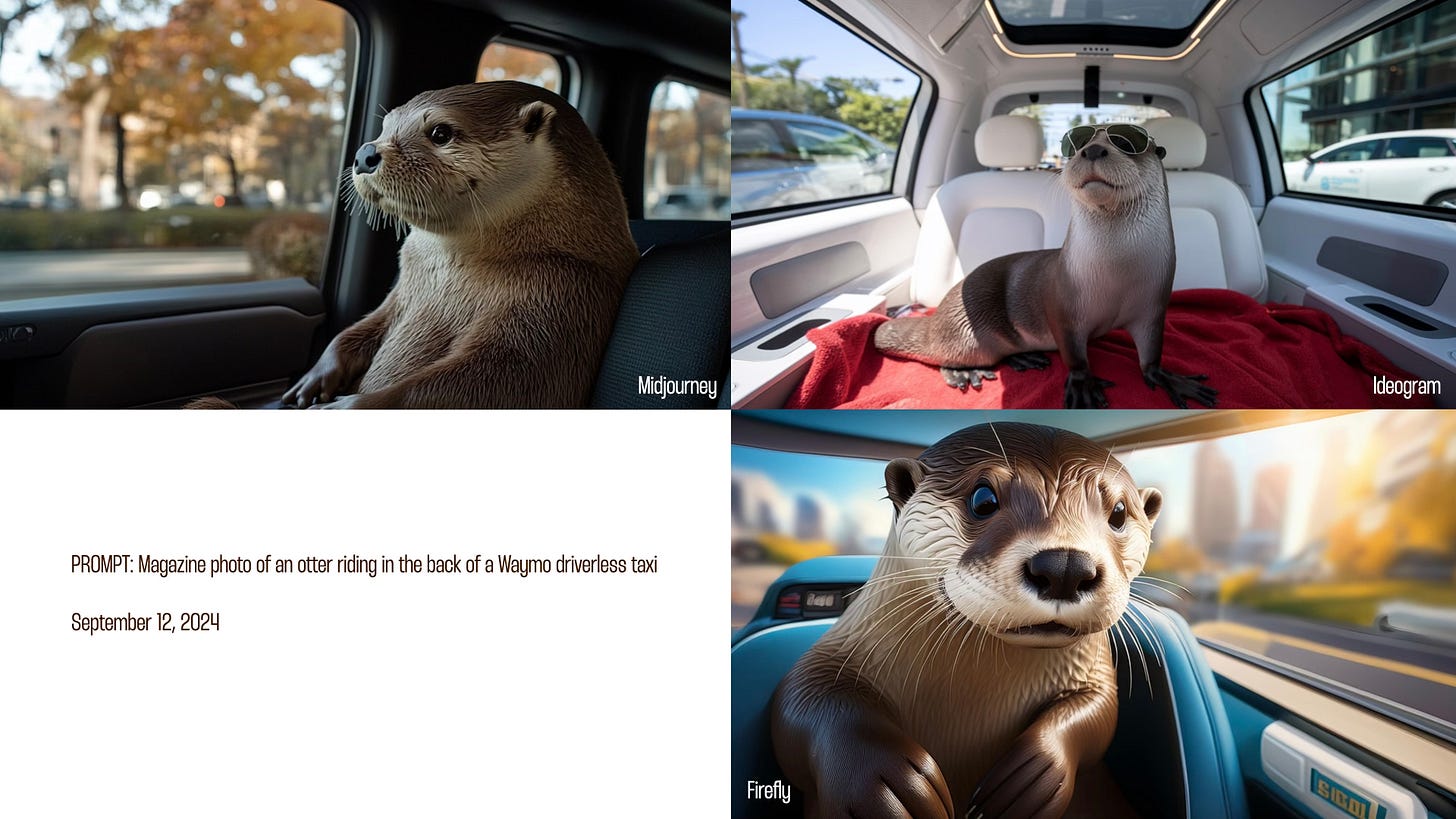

🤖🎨 Ideogram is an appealing alternative for generative AI

Midjourney, Firefly, Meta, et al. have competition with the latest Ideogram offering.

🍎 Finally, someone is making AI normal and maybe even boring

I watched the whole thing on Monday.

I haven’t watched an entire Apple event in years. I miss the live presentations, but honestly, the production value was amazing, nothing broke, and we got to see every detail clearly. Covid changed a lot of things, especially live events.

But first…

FDA-approved AirPod hearing aids? Sold.

This is a game changer. My family has a lot of experience with hearing aids. They are expensive. They are difficult to connect to other devices. If Apple can solve all that, we’re in. The FDA granted approval yesterday.

But there’s an even bigger market: all of us, in any loud setting. Or Gen Xers like me who played in too many bands without hearing protection.

I also think this shift from listening to hearing will be huge.

As an example, my teens both wear their AirPods all the time: in the house, in the car. And it kills me. I take the sight of pods in ears as an insult to being present. They’re just listening. But what if my framing shifted, and I took the presence of AirPods as a signal of hearing enhancement? You wearing AirPods would mean I’ll be more clearly understood (and not just blocked out). This, combined with Apple’s sleep apnea detection, helps expand the brand beyond devices into services and greater relevance in health.

Apple Intelligence = “AI for the rest of us”

There’s that old Mac computer headline: “The computer for the rest of us.” In many ways, my take on Apple Intelligence follows a related path.

It’s very clear Apple is taking AI very seriously. But that seriousness isn’t expressed by shipping feature after feature quickly (see much of the above); it’s expressed by clarifying purpose. Nearly two years after ChatGPT launched, many people are still asking: what is all this AI for?

I applaud Apple for what appears to be significant thinking to answer that question. They’re trying to discern a purpose for technology that has wildly expansive, often unknown capabilities, but will be applied within Apple’s tightly controlled and proven environment. I can imagine Craig Federighi asking, “How’s my mom going to use AI, without having to understand AI?” And what was revealed earlier this week wasn’t so much product features as clearly defined use cases.

In many ways, what Apple is trying to do is make AI invisible. To make it simply a part of how you naturally experience your devices and applications without necessarily calling attention to AI as the enabler of those experiences. And this strategy will help address a lot of the frustrations and misconceptions I’ve experienced in the last two years of evangelizing, training and coaching people around AI.

The key ingredients I noted were:

Personal context understanding: AI that is “unique to you” for “everyday experiences.” This is less about what newfangled video you might generate, and more about how you can integrate and leverage existing data, e.g. “Play that podcast episode Joseph told me about,” or “Find the part of the video where Haley is laughing.” If that’s AI, that’s useful.

Visual intelligence: Being able to point a camera at a location or object and glean information about it will be huge. Yes, OpenAI demonstrated this first, but Apple will make it seamless and normal. Decades ago we talked about “hyperlinking” the real world. This is exactly that, and it’s going to be profound.

Summarization & Integration: Technically speaking, summarization is nothing new; we can already do it inside all the major LLMs. But when you apply Apple’s approach and context, the difference becomes clear. An email summary (e.g. “Campaign is approved, book flights”) is far more useful than four preview lines (e.g. “The client called back. Blah blah blah the information you really need is buried here somewhere.”). And because Apple Intelligence works within iOS, it can spark action across different applications. Helping me parse the deluge of inputs, then surfacing potential actions, is a great use of AI.

Don’t get me wrong.

I love the jagged edge of AI. I enjoy wrangling prompts to generate remarkable results. But I also recognize how far removed most generative AI has been from pragmatic, commonplace solutions. Apple envisions AI as plumbing, not as an imaginative castle. And what sets Apple’s approach apart is its ability to embed complex technology in ways that unlock and enhance everyday tasks.

Of course, we’ll have to jump in and use it for real, starting early October. But the pitch was compelling.

Last but not least…

Were you addicted to watching The Sopranos like I was?

Sunday nights. HBO. And then Monday back in the office to gossip about David Chase’s writing, Alik Sakharov’s cinematography, or the fabulous acting ensemble. Well, there’s a brilliant new documentary on the inspiration and making of The Sopranos. More background via Bob Lefsetz.