118: Learning GenAI choreography

[During - AI for Artists and Entrepreneurs] Adobe's Kris Kashtanova came to class

The cartoon above reminds me of an exercise we’d perform in the “everyday improv” classes at the Brave New Workshop. Puppy, Jupiter, Birthday, Mystery, Mermaid, Hemingway = Invent a Story! Humans have been mashing up and cross-referencing forever. Now you can sort of do it with a computer.

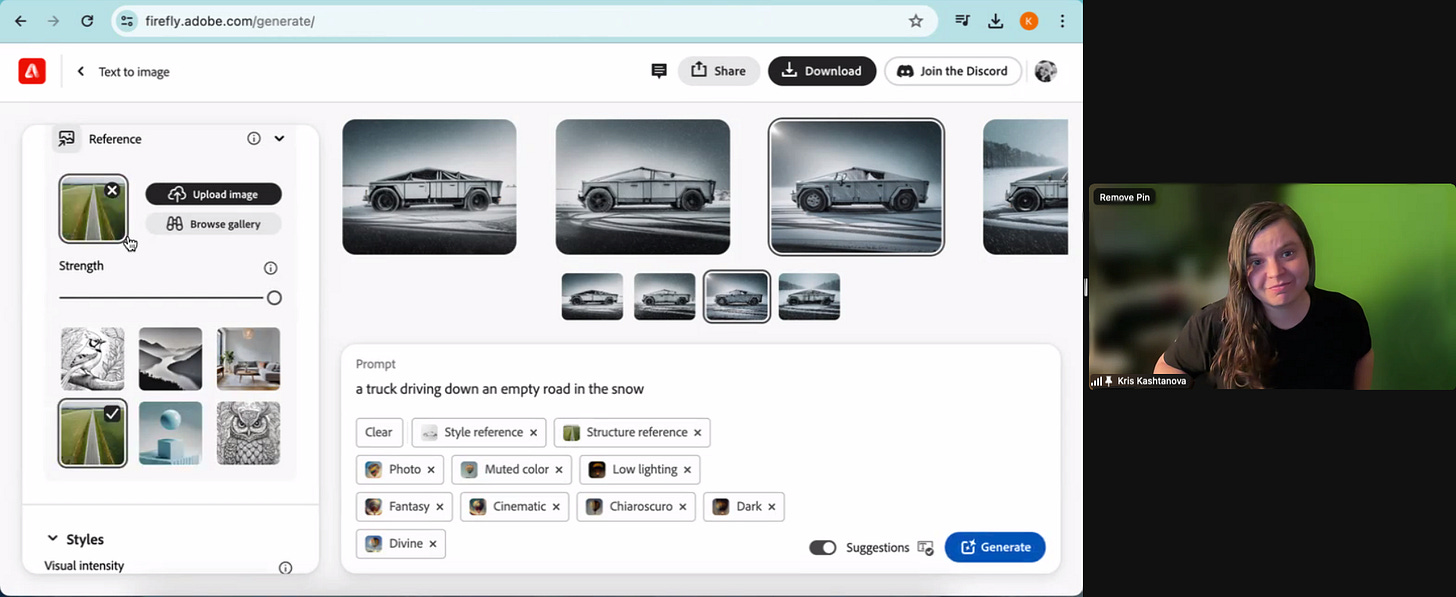

Thank you, Kris Kashtanova

“AI is like musical instruments—without an idea it’s just equipment.”

The story of generative images in the past 16 months has been loud fits and bursts, tiny enhancements blasted across social as world-changing revolutions. “OMG --cref!” And then there’s Adobe.

The firm seems to recognize its audience: designers with not enough hours, not enough clarity, and a nagging suspicion about the mental acuity of their co-workers. So Adobe iterates carefully. Firefly wasn’t first. It wasn’t cutting edge. But it’s thorough, and it’s integrated. In this newfangled reality, that approach will endure.

An unsung component of Adobe’s perseverance has been its army of ambassadors, trainers and evangelists. People like Kris Kashtanova (LinkedIn), a software engineer and photographer with a curiosity about the peculiarities of where art meets tech. Many thanks to Kris for joining MCAD’s AI for Artists and Entrepreneurs class last night. She helped us contemplate nomenclature and nuance. For example, Firefly sees a significant difference between the words “product” and “macro” in an image generation prompt. Or did you know Adobe doesn’t alter a reference image you upload, but Midjourney does?

We spent a lot of time discussing two tools: Structure and Style. Within Firefly, Structure refers to the DNA or architecture of an image: its shapes, perspective and grid. Much can be achieved by uploading line drawings to guide Firefly’s generation. Style refers to the facade, or surface on top of the Structure: its texture, lighting and color. Kris recommended creating and uploading Photoshop “bashes,” i.e. collages, to tune Firefly’s output. Both tools include the notion of Strength, which sets how “much” Firefly should reference or indulge a given input. And finally, iterate over and over, over and over, over and over. As impressive as Firefly can appear on its surface, the pros know the real benefit comes from lots and lots and lots and lots of trial and error.

We’re beginning to see a professionalization of GenAI as the knobs and dials that control outcomes evolve and get incorporated into long-existing systems like Photoshop and Premiere. (Adobe just announced a slew of new GenAI video capabilities.) But the benefits you receive will come first and foremost from the effort you put in.

AI+Creativity Update

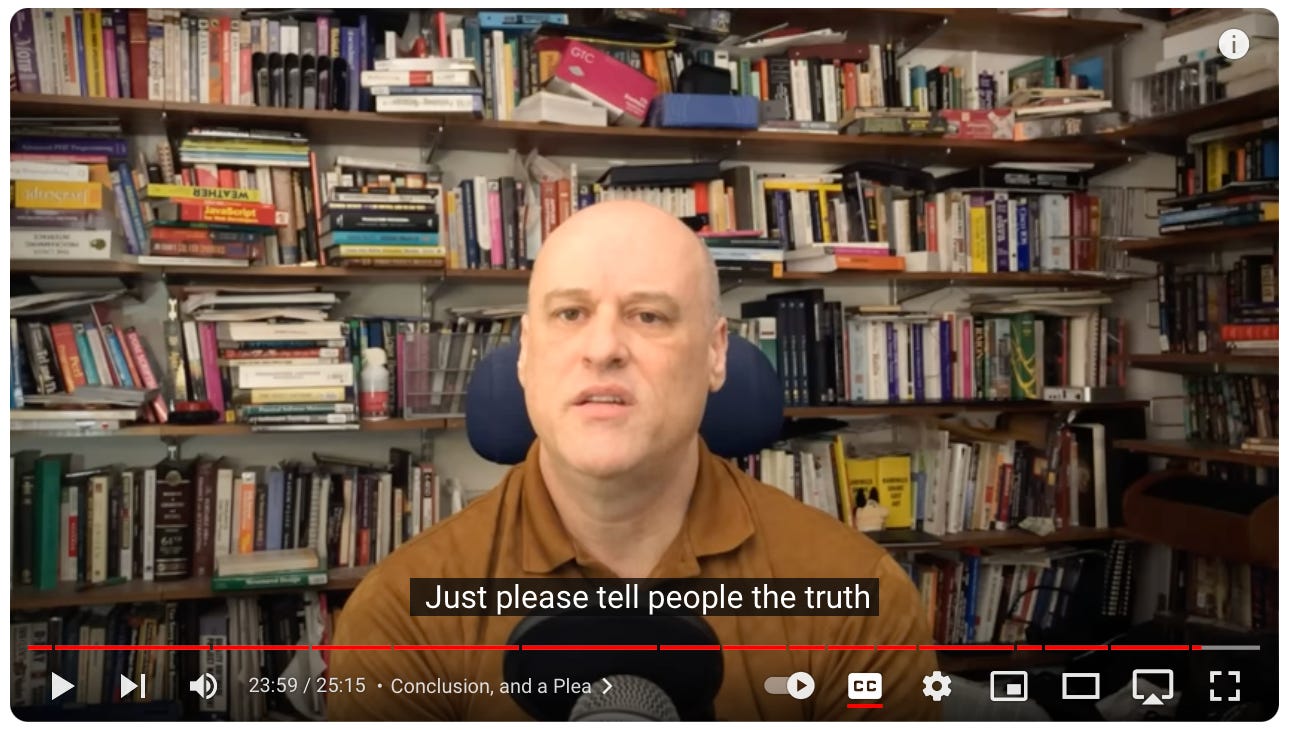

🤖😟 Oh, Devin. A couple weeks ago there was much ado about Devin, the coding AI from Cognition Labs. “You don’t have to know how to code to code!” But thanks to the Internet, and mostly to Carl from Internet of Bugs (seen above), we learned the demo videos were…less than honest? 🤦🏻 Of course! As a branding person, I find it mind-boggling: when will tech brands learn you can’t fake the demo video? From the comments: “I really hate how normalized faking it in demos has become.”

🧴 Need to place existing product images into generative backgrounds? One of my students did, and pointed me to Flair AI. The outputs have been impressive! (And one suspects this sort of capability will become common inside Adobe soon enough.)

👑 NYU Stern Dean of Students Conor Grennan suggests English majors will prevail in the age of GenAI. It’s not about coding ability, it’s about the ability to empathize and reason.